AP Statistics - Fall Final Exam

... ____ 22. You select one student from this group at random. What is the probability that this student typically ...

PSTAT 120B Probability and Statistics - Week 2

... A couple of notes about HW1 #3 (6.14): it uses the transformation method. We can begin with the CDF to do the transformation, or set up the Jacobian and use the transformation formula. Compute the integral carefully. This type of problem is very IMPORTANT; the same type of problem came up again in HW2 #1. ...
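
The two routes named above (CDF method vs. Jacobian/transformation formula) should give the same density. Problem 6.14 itself is not shown here, so this is a minimal sketch on an assumed, hypothetical example: X ~ Uniform(0, 1) and Y = X². All function names are illustrative. The CDF route gives F_Y(y) = P(X ≤ √y) = √y; the Jacobian route gives f_Y(y) = f_X(√y) · |d√y/dy| = 1/(2√y).

```python
import math
import random

# Hypothetical example (NOT the actual HW problem): X ~ Uniform(0, 1), Y = X**2.

def cdf_y(y):
    """CDF method: F_Y(y) = P(X**2 <= y) = P(X <= sqrt(y)) = sqrt(y) on [0, 1]."""
    if y <= 0:
        return 0.0
    if y >= 1:
        return 1.0
    return math.sqrt(y)

def pdf_y_from_cdf(y, h=1e-6):
    """Density from the CDF method: differentiate F_Y numerically (central difference)."""
    return (cdf_y(y + h) - cdf_y(y - h)) / (2 * h)

def pdf_y_jacobian(y):
    """Transformation formula: f_Y(y) = f_X(g^-1(y)) * |d g^-1(y) / dy|,
    with g^-1(y) = sqrt(y), f_X(x) = 1 on (0, 1), |dx/dy| = 1 / (2 sqrt(y))."""
    if not 0 < y < 1:
        return 0.0
    return 1.0 * (1.0 / (2.0 * math.sqrt(y)))

def simulate_cdf(y, n=200_000, seed=0):
    """Monte Carlo estimate of P(Y <= y) by squaring uniform draws."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if rng.random() ** 2 <= y)
    return hits / n

if __name__ == "__main__":
    for y in (0.1, 0.25, 0.5, 0.9):
        print(y, round(pdf_y_from_cdf(y), 4), round(pdf_y_jacobian(y), 4))
    print("P(Y <= 0.25): exact", cdf_y(0.25), "simulated", simulate_cdf(0.25))
```

Agreement between the two density columns, plus the simulation check of the CDF, is a quick way to catch a mistake in the integral before trusting either answer.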

The researcher and the consultant: a dialogue on null hypothesis

... true is a mistake known as a type I error. By choosing those particular values of Z to define those two rejection regions and that one acceptance region, we have set our probability of making a type I error in advance, at 5%. This probability of rejecting the null hypothesis, if it happens to be fa ...
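
For a two-sided Z test at 5%, the usual boundaries of the two rejection regions are Z = ±1.96. A minimal sketch, assuming a standard normal test statistic under the null hypothesis (function names are illustrative), showing that these boundaries fix the type I error rate at 5% both analytically and by simulation:

```python
import math
import random
from statistics import NormalDist

def critical_value(alpha):
    """Two-sided critical value z* with P(|Z| > z*) = alpha when Z ~ N(0, 1)."""
    return NormalDist().inv_cdf(1 - alpha / 2)

def type_one_error_rate(z_crit):
    """Total area of both rejection regions: P(|Z| > z_crit) = erfc(z_crit / sqrt(2))."""
    return math.erfc(z_crit / math.sqrt(2))

def simulated_rejection_rate(z_crit, n=200_000, seed=1):
    """Fraction of draws generated with H0 true that land in a rejection region."""
    rng = random.Random(seed)
    rejections = sum(1 for _ in range(n) if abs(rng.gauss(0.0, 1.0)) > z_crit)
    return rejections / n

if __name__ == "__main__":
    z_star = critical_value(0.05)
    print("critical value:", z_star)
    print("type I error rate:", type_one_error_rate(z_star))
    print("simulated:", simulated_rejection_rate(z_star))
```

The rate is chosen before seeing any data; the simulation only confirms that, when the null hypothesis is true, about 1 draw in 20 falls in a rejection region.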

Aalborg Universitet Inference in hybrid Bayesian networks

... models, the analyst can employ different sources of information, e.g., historical data or expert judgement. Since both of these sources of information can be of low quality, as well as come with a cost, one would like the modelling framework to use the available information as efficiently as possible ...

Document

... – A phenomenon can be proven to be random (i.e., obeying laws of statistics) only if we observe infinitely many cases – F. James et al.: “this definition is not very appealing to a mathematician, since it is based on experimentation, and, in fact, implies unrealizable experiments (N)”. But a physicist ca ...
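
The frequency definition treats probability as the limit of a relative frequency as the number of trials N grows, which no finite experiment reaches. A minimal sketch, assuming hypothetical Bernoulli trials with success probability p = 0.3 (the value and function name are illustrative), showing the relative frequency settling toward p as N increases while never being exactly guaranteed to equal it:

```python
import random

def relative_frequencies(p, checkpoints, seed=42):
    """Relative frequency of 'success' in Bernoulli(p) trials, recorded after
    each N in checkpoints (one continuous sequence of trials)."""
    rng = random.Random(seed)
    successes = 0
    n = 0
    results = {}
    for target in sorted(checkpoints):
        while n < target:
            successes += rng.random() < p  # True counts as 1
            n += 1
        results[target] = successes / n
    return results

if __name__ == "__main__":
    for n_trials, freq in relative_frequencies(0.3, [10, 100, 1_000, 10_000, 100_000]).items():
        print(f"N = {n_trials:>6}: relative frequency = {freq:.4f}")
```

Every printed value comes from a finite N, which is exactly the gap the quoted objection points at.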

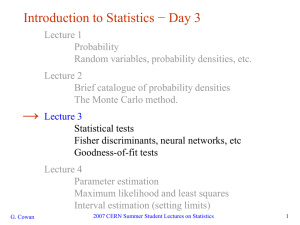

cern_stat_3

... equal or lesser compatibility with H relative to the data we got. This is not the probability that H is true! In frequentist statistics we don’t talk about P(H) (unless H represents a repeatable observation). In Bayesian statistics we do; use Bayes’ theorem to obtain ...
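
The distinction above can be made concrete with numbers. A minimal sketch, assuming a hypothetical coin-tossing setup (60 heads in 100 tosses, H: the coin is fair, "lesser compatibility" taken as "60 or more heads", and an alternative p = 0.6 with equal prior weight); all names and values are illustrative. The p-value is computed assuming H is true; the posterior P(H | data) needs Bayes' theorem and a prior, and the two numbers differ.

```python
from math import comb

def binom_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def p_value_upper(k_obs, n, p0):
    """Frequentist p-value: P(X >= k_obs | H), X ~ Binomial(n, p0), i.e. the
    probability of data with equal or lesser compatibility with H."""
    return sum(binom_pmf(k, n, p0) for k in range(k_obs, n + 1))

def posterior_h0(k_obs, n, p0, p1, prior_h0=0.5):
    """Bayes' theorem for two simple hypotheses, H0: p = p0 and H1: p = p1:
    P(H0 | data) = P(data | H0) P(H0) / P(data)."""
    like0 = binom_pmf(k_obs, n, p0)
    like1 = binom_pmf(k_obs, n, p1)
    evidence = like0 * prior_h0 + like1 * (1 - prior_h0)
    return like0 * prior_h0 / evidence

if __name__ == "__main__":
    print("p-value:", p_value_upper(60, 100, 0.5))
    print("P(H0 | data):", posterior_h0(60, 100, 0.5, 0.6))
```

The posterior depends on the assumed alternative and prior; change either and it moves, while the p-value does not.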