Analysis of Back Propagation of Neural Network Method in the

... learning mechanism. Information is stored in the weight matrix of a neural network. Learning is the determination of the weights. All learning methods used for adaptive neural networks can be classified into two major categories: supervised learning and unsupervised learning. Supervised learning inc ...

MS PowerPoint 97 format

... • Selection: propagation of fit individuals (proportionate reproduction, tournament selection) • Crossover: combine individuals to generate new ones • Mutation: stochastic, localized modification to individuals – Simulated annealing: can be defined as genetic algorithm • Selection, mutation only • S ...

LETTER RECOGNITION USING BACKPROPAGATION ALGORITHM

... input/output. A number of learning algorithms have been designed for the neural network model. In a neural network, learning can be either supervised, in which the correct output is given during training, or unsupervised, where no help is given (Negnevitsky, 2005). Types of learning algorithm: i) Supervised Learning. A ...

Neural Networks - School of Computer Science

... A typical neural network will have several layers: an input layer, one or more hidden layers, and a single output layer. In practice: no hidden layer: cannot learn non-linearly separable problems; one to three layers: more practical use; more than five layers: computationally expensive ...
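
The point about networks with no hidden layer can be illustrated with a minimal sketch. It assumes a single threshold unit trained with the classic perceptron rule; the function names, learning rate, and epoch count are illustrative choices, not taken from the excerpt. The unit learns AND, which is linearly separable, but always leaves at least one XOR pattern misclassified, because no single line separates the XOR classes.

```python
def step(z):
    """Threshold activation: 1 if the net input is positive, else 0."""
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs=50, lr=1.0):
    """Train a single unit (no hidden layer) with the perceptron rule."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            y = step(w[0] * x1 + w[1] * x2 + b)
            # Weights change only when the prediction is wrong (target != y).
            w[0] += lr * (target - y) * x1
            w[1] += lr * (target - y) * x2
            b += lr * (target - y)
    return w, b


def count_errors(samples, w, b):
    """Number of patterns the trained unit still misclassifies."""
    return sum(step(w[0] * x1 + w[1] * x2 + b) != t for (x1, x2), t in samples)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_errors = count_errors(AND_DATA, *train_perceptron(AND_DATA))
xor_errors = count_errors(XOR_DATA, *train_perceptron(XOR_DATA))
print("AND errors:", and_errors, "XOR errors:", xor_errors)
```

Adding a hidden layer is what removes this limitation, at the cost of more computation as the number of layers grows.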

Rainfall Prediction with TLBO Optimized ANN *, K Srinivas B Kavitha Rani

... model complexity, these models are often fitted without serious consideration of parameter values, resulting in poor performance during verification. Another problem with both conceptual and physically-based models is that empirical regularities or periodicities are not always evident and can often ...

Lecture 6

... Supervised learning: teach network explicitly to perform task (e.g. gradient descent algorithm) ...

... computers. In this paper, perceptron algorithm was used to calculate output variables BMC and BMD using input variables such as age (years), height (m), weight (Kg), resistance (R), and capacitance (Xc). Mathematical equations were developed using input variables. It consists of large number of simp ...

Introduction to ANNs

... clap). A single neuron can only emit a pulse (“fires”) when the total input is above a certain threshold. This characteristic led to the McCulloch and Pitts model (1943) of the artificial neural network (ANN). Glance briefly at Figure 2, which illustrates how learning occurs in a biological neural n ...
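
The threshold behaviour described in this excerpt can be sketched as a small function. This is a minimal illustration that assumes a weighted sum of binary inputs; the weights and thresholds below are hypothetical values chosen so that the same unit acts as a logical AND or OR.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) only when the total weighted input is above the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Hypothetical settings: unit weights, with the threshold deciding the logic.
UNIT_WEIGHTS = [1, 1]
INPUT_PAIRS = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Threshold 1.5: both inputs must be active before the neuron fires (AND).
and_outputs = [threshold_neuron(p, UNIT_WEIGHTS, 1.5) for p in INPUT_PAIRS]
# Threshold 0.5: a single active input is enough (OR).
or_outputs = [threshold_neuron(p, UNIT_WEIGHTS, 0.5) for p in INPUT_PAIRS]

print(and_outputs)
print(or_outputs)
```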

Artificial Intelligence

... part of the Mobile Servicing System on the International Space Station (ISS), and extends the function of this system to replace some ...

SOLARcief2003

... Calculates its data and control outputs and provides them as inputs to others. Computes statistical information (for example, entropy based information deficiency) in its subspaces. Makes associations with other neurons. ...

Artificial Neural Networks

... flows of potassium and sodium ions. This signal is in the form of a pulse (rather like the sound of a hand clap). A single neuron can only emit a pulse (“fires”) when the total input is above a certain threshold. This characteristic led to the McCulloch and Pitts model (1943) of the artificial neura ...

criteria of artificial neural network in recognition of pattern and image

... mapping. The learning process involves updating network architecture and connection weights so that a network can efficiently perform a specific classification/clustering task. The increasing popularity of neural network models to solve pattern recognition problems has been primarily due to their se ...

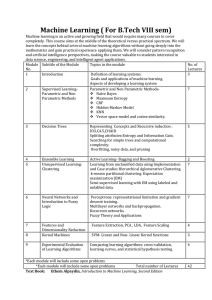

Machine Learning syl..

... Machine learning is an active and growing field that would require many courses to cover completely. This course aims at the middle of the theoretical versus practical spectrum. We will learn the concepts behind several machine learning algorithms without going deeply into the mathematics and gain p ...

+ w ij ( p)

... The neuron with the largest activation level among all neurons in the output layer becomes the winner. This neuron is the only neuron that produces an output signal. The activity of all other neurons is suppressed in the competition. The lateral feedback connections produce excitatory or inhib ...
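
The winner-takes-all competition in this excerpt can be sketched as follows. The sketch assumes each output neuron's activation level is the dot product of its weight vector with the input, which the excerpt does not state; the lateral feedback connections are not modelled, only their net effect of suppressing every neuron except the winner. The weight values are hypothetical.

```python
def winner_take_all(weight_rows, x):
    """Return the index of the winning neuron and the suppressed output vector."""
    # Assumed activation: dot product of each neuron's weights with the input.
    activations = [sum(w * xi for w, xi in zip(row, x)) for row in weight_rows]
    winner = max(range(len(activations)), key=lambda j: activations[j])
    # Only the winner produces an output signal; all others are set to 0.
    outputs = [1 if j == winner else 0 for j in range(len(activations))]
    return winner, outputs


# Hypothetical weights for three output neurons and two inputs.
WEIGHTS = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]

winner, outputs = winner_take_all(WEIGHTS, [1.0, 0.0])
print(winner, outputs)
```

If two neurons tie for the largest activation, this sketch picks the one with the lower index; a real competitive layer would need its own tie-breaking rule.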

Pattern recognition with Spiking Neural Networks: a simple training

... [4], the second generation being networks such as feedforward networks where neurons apply an “activation function”, and the third generation being networks where neurons use spikes to encode information. From this retrospective, Maass presents the computational advantages of SNNs according to the c ...

RNI_Introduction - Cognitive and Linguistic Sciences

... correspond in any sense to single neurons or groups of neurons. Physiology (fMRI) suggests that any complex cognitive structure – a word, for instance – gives rise to widely distributed cortical activation. Therefore a node in a language-based network like WordNet corresponds to a very complex neura ...

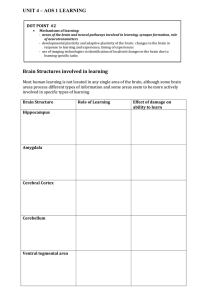

Catastrophic interference

Catastrophic interference, also known as catastrophic forgetting, is the tendency of an artificial neural network to completely and abruptly forget previously learned information upon learning new information. Neural networks are an important part of the network approach and connectionist approach to cognitive science. These networks use computer simulations to try to model human behaviours, such as memory and learning. Catastrophic interference is an important issue to consider when creating connectionist models of memory. It was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989) and Ratcliff (1990). It is a radical manifestation of the ‘sensitivity-stability’ dilemma, also called the ‘stability-plasticity’ dilemma: the problem of building an artificial neural network that is sensitive to, but not disrupted by, new information.

Lookup tables and connectionist networks lie on opposite sides of the stability-plasticity spectrum. The former remain completely stable in the presence of new information but lack the ability to generalize, i.e. infer general principles, from new inputs. Connectionist networks such as the standard backpropagation network, on the other hand, are very sensitive to new information and can generalize from new inputs. Backpropagation models can be considered good models of human memory insofar as they mirror the human ability to generalize, but these networks often exhibit less stability than human memory. Notably, they are susceptible to catastrophic interference. This is a problem when attempting to model human memory because, unlike these networks, humans typically do not show catastrophic forgetting. Thus, catastrophic interference must be eliminated from backpropagation models in order to enhance their plausibility as models of human memory.
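
The effect can be seen even in a deliberately tiny stand-in for a backpropagation network. The sketch below assumes a single linear unit trained by gradient descent on squared error (the delta rule), not the multi-layer networks studied by McCloskey and Cohen; the two patterns, the learning rate, and the step count are hypothetical. The unit first learns an "old" item, then is trained only on a "new" item that shares one input with it.

```python
def predict(w, x):
    """Output of a single linear unit."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train(w, x, target, lr=0.1, steps=200):
    """Gradient descent on squared error for one pattern (delta rule)."""
    w = list(w)
    for _ in range(steps):
        err = target - predict(w, x)
        w = [wi + lr * err * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical items: the new pattern shares its first input with the old one.
pattern_a, target_a = [1.0, 1.0], 1.0  # old item, learned first
pattern_b, target_b = [1.0, 0.0], 0.0  # new item, learned afterwards

w = train([0.0, 0.0], pattern_a, target_a)
error_a_before = abs(target_a - predict(w, pattern_a))

w = train(w, pattern_b, target_b)  # sequential training on the new item only
error_a_after = abs(target_a - predict(w, pattern_a))
error_b_after = abs(target_b - predict(w, pattern_b))

print(error_a_before, error_a_after, error_b_after)
```

In this sketch the error on the old item rises from about 0 to about 0.5 once the new item has been learned, because the shared weight is overwritten. Nothing in the training procedure protects what was stored earlier, which is the sensitivity side of the stability-plasticity dilemma in miniature.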