An Algorithm for Fast Convergence in Training Neural Networks

... Although the Error Backpropagation algorithm (EBP) [1][2][3] has been a significant milestone in neural network research, it is known to have a very poor convergence rate. Many attempts have been made to speed up the EBP algorithm. Commonly known heuristic approac ...
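
As a concrete reference point, here is a minimal sketch of EBP-style batch gradient descent for a single sigmoid neuron, with an optional momentum term. Momentum is one widely used speed-up heuristic; whether it is among the approaches the truncated excerpt goes on to list cannot be told from this text. The OR training set, learning rate, tolerance and function names are illustrative assumptions, not the paper's algorithm.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative training set (an assumption): the logical OR function.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

def train(lr=0.5, momentum=0.0, tol=0.05, max_epochs=100_000):
    """Batch gradient descent with error backpropagation for one sigmoid neuron.

    Returns (epochs used, weights) with weights = [w1, w2, bias].
    momentum=0.0 gives plain EBP; momentum > 0 adds a velocity term.
    """
    w = [0.0, 0.0, 0.0]
    velocity = [0.0, 0.0, 0.0]
    for epoch in range(1, max_epochs + 1):
        grad = [0.0, 0.0, 0.0]
        sse = 0.0
        for (x1, x2), target in DATA:
            y = sigmoid(w[0] * x1 + w[1] * x2 + w[2])
            err = y - target
            sse += err * err
            # Backpropagated error term dE/dz for E = 0.5 * sum (y - t)^2
            delta = err * y * (1.0 - y)
            for i, xi in enumerate((x1, x2, 1.0)):  # 1.0 is the bias input
                grad[i] += delta * xi
        if sse < tol:  # stop once the sum of squared errors is small enough
            return epoch, w
        for i in range(3):
            velocity[i] = momentum * velocity[i] - lr * grad[i]
            w[i] += velocity[i]
    return max_epochs, w

plain_epochs, _ = train()                 # plain EBP
momentum_epochs, _ = train(momentum=0.9)  # EBP with a momentum term
print(plain_epochs, momentum_epochs)
```

Epoch counts on a toy problem like this say little about real networks; the sketch only shows where a speed-up heuristic plugs into the weight update.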

Metody Inteligencji Obliczeniowej (Computational Intelligence Methods)

... • no need for vector spaces (structured objects),
• more general than the fuzzy approach (F-rules are reduced to P-rules),
• includes kNN, MLPs, RBFs, separable function networks, SVMs, kernel methods and many others!
Components => Models; systematic search selects the optimal combination of parameters and ...

Learning of Compositional Hierarchies By Data-Driven Chunking, Karl Pfleger

... discrete, finite alphabet, and where the data is potentially unbounded in both directions, not organized into a set of strings with definite beginnings and ends. Unlike some chunking systems, our chunking is purely data-driven in the sense that only the data, and its underlying statistical regularit ...

fgdfgdf - Harbin Institute of Technology personal homepage

... The intermediate layers are referred to as hidden layers because they are not connected directly to the outside world: their values are neither supplied as network inputs nor read out as network outputs. Prof. K. Li ...
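
A forward pass makes the role of a hidden layer concrete: the hidden activations are computed internally and passed on to the next layer. A minimal sketch, assuming a hypothetical 2-3-1 network with tanh hidden units, a sigmoid output and hand-picked weights (none of these choices come from the excerpt):

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W x + b), using plain Python lists."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(x, params):
    # The hidden layer reads the input layer and feeds the output layer;
    # its values are intermediate and are not part of the network's output.
    hidden = dense(x, params["W1"], params["b1"], math.tanh)
    output = dense(hidden, params["W2"], params["b2"], sigmoid)
    return hidden, output

# Hypothetical weights for a 2-input, 3-hidden, 1-output network
params = {
    "W1": [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]],
    "b1": [0.0, 0.1, -0.1],
    "W2": [[1.0, -1.0, 0.5]],
    "b2": [0.2],
}
hidden, output = forward([1.0, 2.0], params)
print(len(hidden), len(output))  # 3 1
```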

Learning Predictive Categories Using Lifted Relational

... dataset⁵ which describes 50 animals in terms of 85 Boolean features, such as fish, large, smelly, strong, and timid. This dataset was originally created in [7], and was used among others for evaluating a related learning task in [5]. For both objects and properties, we have used two levels of categor ...

Artificial Neural Networks and Near Infrared Spectroscopy

... laboratory reference values and the predicted values. In a linear model we estimate 100 regression coefficients plus an offset, 101 coefficients in total. In ANN modeling the estimation part is more complex; in the case presented we need 100 × 3 weights (w's) + 3 × 1 weights (v's) (excluding biases) besides ...
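
The parameter counts quoted above can be checked with a few lines of arithmetic. A minimal sketch, assuming a fully connected network with 100 inputs, 3 hidden neurons and 1 output, and one bias per hidden and output neuron (the bias count is an assumption, since the excerpt is cut off before it discusses biases; the function names are illustrative):

```python
def linear_param_count(n_inputs):
    # One regression coefficient per input plus one offset
    return n_inputs + 1

def mlp_param_count(n_inputs, n_hidden, n_outputs, include_biases=True):
    # Input-to-hidden weights (w's) plus hidden-to-output weights (v's)
    weights = n_inputs * n_hidden + n_hidden * n_outputs
    # Assumed: one bias per hidden neuron and per output neuron
    biases = n_hidden + n_outputs if include_biases else 0
    return weights + biases

print(linear_param_count(100))                           # 101
print(mlp_param_count(100, 3, 1, include_biases=False))  # 303
print(mlp_param_count(100, 3, 1))                        # 307
```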

B42010712

... missing values (a trained network is capable of correctly filling in the value without affecting the prediction); neural networks act as universal approximators: they can approximate an arbitrary continuous function on a compact domain to arbitrary precision. ...
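
The universal-approximation property can be illustrated constructively. The sketch below hand-builds a network with one hidden layer of steep sigmoid units and a linear output that approximates a continuous target on [0, 1]. The target sin(2πx), the steepness of 1000 and the helper names are illustrative assumptions, and the weights are set by hand rather than learned, so this demonstrates the idea rather than proving the theorem or showing a training method.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function (avoids overflow for large |z|)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def build_staircase_net(f, n_hidden, steepness=1000.0):
    """One hidden layer of steep sigmoids approximating f on [0, 1].

    Hidden unit k switches on at the midpoint between grid points x_{k-1} and x_k;
    its output weight is the jump f(x_k) - f(x_{k-1}). The output unit is linear.
    """
    knots = [k / n_hidden for k in range(n_hidden + 1)]
    centers = [(knots[k - 1] + knots[k]) / 2 for k in range(1, n_hidden + 1)]
    out_weights = [f(knots[k]) - f(knots[k - 1]) for k in range(1, n_hidden + 1)]
    out_bias = f(knots[0])

    def net(x):
        return out_bias + sum(v * sigmoid(steepness * (x - c))
                              for v, c in zip(out_weights, centers))
    return net

def max_error(f, g, samples=1001):
    """Largest absolute difference between f and g on an evenly spaced grid."""
    xs = [i / (samples - 1) for i in range(samples)]
    return max(abs(f(x) - g(x)) for x in xs)

target = lambda x: math.sin(2 * math.pi * x)
for n in (5, 20, 80):
    print(n, max_error(target, build_staircase_net(target, n)))
```

More hidden units give a finer staircase, so the maximum error shrinks as `n` grows.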

Neural Networks and Fuzzy Logic Systems

... JAWAHARLAL NEHRU TECHNOLOGICAL UNIVERSITY HYDERABAD IV Year B.Tech. M.E. II-Sem T P C ...

Cognitive Neuroscience

... Which mechanisms? Analogies with computers? RAM, CPU? Logic? Those are poor analogies. ...

Artificial intelligence neural computing and

... of outputs. Various methods to set the strengths of the connections exist. One way is to set the weights explicitly, using a priori knowledge. Another way is to train the neural network by feeding it teaching patterns and letting it change its weights according to some learning rule. The learning si ...
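
The two ways of setting connection strengths described above can be contrasted on a toy problem. A minimal sketch, assuming a single threshold unit, the logical AND function as the teaching patterns, and the classic perceptron learning rule as the learning rule (the excerpt does not say which rule it means; all names here are illustrative):

```python
def step(z):
    """Threshold activation: 1 if the net input is non-negative, else 0."""
    return 1 if z >= 0 else 0

def predict(weights, bias, x):
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Teaching patterns for logical AND (an illustrative task, not from the text)
AND_PATTERNS = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

# Way 1: set the weights explicitly from a priori knowledge
# ("fire only if both inputs are on" -> weights 1, 1 and threshold 1.5).
explicit_w, explicit_b = [1.0, 1.0], -1.5

# Way 2: learn the weights from the teaching patterns (perceptron rule).
def train_perceptron(patterns, lr=1, max_epochs=100):
    w, b = [0, 0], 0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in patterns:
            error = target - predict(w, b, x)  # -1, 0 or +1
            if error:
                mistakes += 1
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
        if mistakes == 0:  # every pattern is classified correctly
            break
    return w, b

learned_w, learned_b = train_perceptron(AND_PATTERNS)
print(learned_w, learned_b)  # [2, 1] -3
```

Both routes end up with weights that reproduce AND, but the learned weights differ from the hand-set ones: the learning rule only needs some separating solution, not the one a designer would pick.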

A Project on Gesture Recognition with Neural Networks for

... to recognize user gestures for computer games. Our goals are two-fold: first, we want students to recognize that neural networks are a powerful and practical technique for solving complex real-world problems, such as gesture recognition. Second, we want the students to understand both neural networ ...

State graph

... The first intelligent behavior required by the puzzle-solving machine is the extraction of information through a visual medium. Unlike photographing an image, the problem is to understand the image (computer vision), that is, the ability to perceive. Since the possible images are finite, the machine can mere ...

Artificial Neural Networks - Computer Science, Stony Brook University

... [9] http://cs.stanford.edu/people/eroberts/courses/soco/projects/neural-networks/History/history1.html [10] http://psych.utoronto.ca/users/reingold/courses/ai/cache/neural4.html [11] http://www.alyuda.com/products/forecaster/neural-network-applications.htm ...

Decision making with support of artificial intelligence

... It is obvious that applying exact methods to find the solution is preferable. However, in some situations obtaining the exact solution with conventional tools and methods is complicated. For example, for several logical tasks it is more effective to apply logical programming tools, freque ...

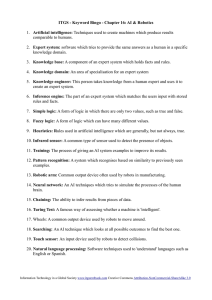

teacher clues - ITGS Textbook

... 6. Inference engine: The part of an expert system which matches the user's input with stored rules and facts.
7. Simple logic: A form of logic in which there are only two values, such as true and false.
8. Fuzzy logic: A form of logic which can have many different values.
9. Heuristics: Rules used in ...
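
The glossary entries on inference engines and on simple versus fuzzy logic can be made concrete in a few lines. A minimal sketch, assuming a hypothetical rule base about animals, forward chaining as the matching strategy, and a linear membership function for "warm" between 15 and 25 °C (all illustrative choices, not from the textbook):

```python
# Hypothetical rule base: (set of required facts, conclusion)
RULES = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
]

def infer(facts, rules):
    """Tiny forward-chaining inference engine.

    Repeatedly matches the known facts against each rule's conditions and adds
    the conclusion of every rule that fires, until nothing new can be derived.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

def is_warm(temp_c):
    """Two-valued ('simple') logic: the statement is either true or false."""
    return temp_c >= 20.0

def warm_degree(temp_c):
    """Fuzzy logic: degree of truth in [0, 1], rising linearly from 15 to 25 degrees C."""
    return min(1.0, max(0.0, (temp_c - 15.0) / 10.0))

print(sorted(infer({"has_feathers", "lays_eggs", "cannot_fly", "swims"}, RULES)))
print(is_warm(19.0), warm_degree(19.0))  # False 0.4
```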

Catastrophic interference

Catastrophic interference, also known as catastrophic forgetting, is the tendency of an artificial neural network to completely and abruptly forget previously learned information upon learning new information. Neural networks are an important part of the network approach and connectionist approach to cognitive science. These networks use computer simulations to try to model human behaviours, such as memory and learning. Catastrophic interference is an important issue to consider when creating connectionist models of memory. It was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989) and Ratcliff (1990).

It is a radical manifestation of the ‘sensitivity-stability’ dilemma or the ‘stability-plasticity’ dilemma. Specifically, these problems refer to the challenge of building an artificial neural network that is sensitive to, but not disrupted by, new information. Lookup tables and connectionist networks lie on opposite sides of the stability-plasticity spectrum. The former remains completely stable in the presence of new information but lacks the ability to generalize, i.e. to infer general principles, from new inputs. On the other hand, connectionist networks like the standard backpropagation network are very sensitive to new information and can generalize to new inputs.

Backpropagation models can be considered good models of human memory insofar as they mirror the human ability to generalize, but these networks often exhibit less stability than human memory. Notably, these backpropagation networks are susceptible to catastrophic interference. This is considered an issue when attempting to model human memory because, unlike these networks, humans typically do not show catastrophic forgetting. Thus, catastrophic interference must be eliminated from these backpropagation models in order to make them more plausible as models of human memory.
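
The effect can be reproduced at toy scale. A minimal sketch, assuming a single linear unit trained by gradient descent on squared error (a deliberately simplified stand-in for a full backpropagation network) and two hypothetical one-pattern "tasks" that share an input feature. After training on task A and then on task B alone, the shared weight is overwritten and the error on task A jumps, whereas training on both tasks interleaved finds weights that satisfy both.

```python
def predict(w, x):
    """Output of a single linear unit: y = w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))

def train(w, patterns, lr=0.1, epochs=500):
    """Gradient descent on squared error, one pattern at a time (illustrative settings)."""
    w = list(w)
    for _ in range(epochs):
        for x, target in patterns:
            err = predict(w, x) - target
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w

def mse(w, patterns):
    return sum((predict(w, x) - t) ** 2 for x, t in patterns) / len(patterns)

# Hypothetical tasks: both patterns use input feature 0, so they share weight w[0].
TASK_A = [((1.0, 1.0), 1.0)]
TASK_B = [((1.0, 0.0), -1.0)]

w_a = train([0.0, 0.0], TASK_A)              # learn task A
w_ab = train(w_a, TASK_B)                    # then learn task B only (sequential)
w_mix = train([0.0, 0.0], TASK_A + TASK_B)   # learn A and B interleaved

print(mse(w_a, TASK_A))             # close to 0: task A learned
print(mse(w_ab, TASK_A))            # about 2.25: task A overwritten by task B
print(mse(w_mix, TASK_A + TASK_B))  # close to 0: both tasks retained
```

Interleaving is used here only as a contrast; it assumes the old training data are still available, which sequential-learning scenarios typically lack.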