
Review on Linear Algebra

Spring 2017

Instructor: Tai-Yue (Jason) Wang

Department of Industrial and Information Management

Institute of Information Management

Contents

Introduction to System of Linear Equations, Matrices, and Matrix Operations

Euclidean Vector Spaces

General Vector Spaces

Inner Product Spaces

Eigenvalue and Eigenvector

Introduction to System of Linear Equations, Matrices, and Matrix Operations

Linear Equations

Any straight line in the xy-plane can be represented algebraically by an equation of the form

$$a_1 x + a_2 y = b$$

More generally, a linear equation in the $n$ variables $x_1, x_2, \ldots, x_n$ is one that can be written in the form

$$a_1 x_1 + a_2 x_2 + \cdots + a_n x_n = b$$

where $a_1, a_2, \ldots, a_n$ and $b$ are real constants.

The variables in a linear equation are sometimes

called unknowns.


Example (Linear Equations)

The equations

$$x + 3y = 7, \qquad y = \tfrac{1}{2}x + 3z + 1, \qquad \text{and} \qquad x_1 - 2x_2 - 3x_3 + x_4 = 7$$

are linear.

A linear equation does not involve any products or roots of variables.

All variables occur only to the first power and do not appear as arguments of trigonometric, logarithmic, or exponential functions.

The equations

$$x + 3\sqrt{y} = 5, \qquad 3x + 2y - z + xz = 4, \qquad \text{and} \qquad y = \sin x$$

are not linear (nonlinear).


Example (Linear Equations)

A solution of a linear equation is a sequence of n

numbers s1, s2, …, sn such that the equation is

satisfied.

The set of all solutions of the equation is called its

solution set or general solution of the equation.

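Linear Systems

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\
&\;\;\vdots \\
a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= b_m
\end{aligned}
$$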

A finite set of linear equations in the variables x1,

x2, …, xn is called a system of linear equations or

a linear system.

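A sequence of numbers $s_1, s_2, \ldots, s_n$ is called a solution of the system if $x_1 = s_1, x_2 = s_2, \ldots, x_n = s_n$ satisfies every equation in the system.

A system that has no solution is said to be inconsistent.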

If there is at least one solution of the system, it is

called consistent.

Every system of linear equations has either no solutions, exactly one solution, or infinitely many solutions.

Augmented Matrices

If we mentally keep track of the location of the +'s, the x's, and the ='s, a system of linear equations can be abbreviated by writing only the rectangular array of numbers.

This array is called the augmented matrix (擴增矩陣) for the system.

The unknowns must be written in the same order in each equation, and the constants must be on the right.

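Augmented Matrices

In computer science, an array is a data structure consisting of a group of elements that are accessed by indexing.

The system

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\
&\;\;\vdots \\
a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= b_m
\end{aligned}
$$

has the augmented matrix

$$
\left[\begin{array}{cccc|c}
a_{11} & a_{12} & \cdots & a_{1n} & b_1 \\
a_{21} & a_{22} & \cdots & a_{2n} & b_2 \\
\vdots & \vdots & & \vdots & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn} & b_m
\end{array}\right]
$$

The 1st row of this matrix is $a_{11}, a_{12}, \ldots, a_{1n}, b_1$, and the 1st column is $a_{11}, a_{21}, \ldots, a_{m1}$.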

Homogeneous (齊次) Linear Systems

A system of linear equations is said to be homogeneous if the constant terms are all zero; that is, the system has the form:

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= 0 \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= 0 \\
&\;\;\vdots \\
a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= 0
\end{aligned}
$$

Homogeneous Linear Systems

Every homogeneous system of linear equations is consistent, since all such systems have $x_1 = 0, x_2 = 0, \ldots, x_n = 0$ as a solution.

This solution is called the trivial solution (零解).

If there are other solutions, they are called nontrivial solutions (非零解).

There are only two possibilities for the solutions of a homogeneous system:

The system has only the trivial solution.

The system has infinitely many solutions in addition to the trivial solution.


Theorem

Theorem 1

A homogeneous system of linear equations with more

unknowns than equations has infinitely many solutions.

Remark

This theorem applies only to homogeneous systems.

A nonhomogeneous system with more unknowns than equations need not be consistent (e.g., two parallel planes in 3-space give two equations in three unknowns with no common solution); however, if the system is consistent, it will have infinitely many solutions.


Definition and Notation

A matrix is a rectangular array of numbers. The numbers in

the array are called the entries in the matrix

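A general $m \times n$ matrix $A$ is denoted as

$$
A = \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1n} \\
a_{21} & a_{22} & \cdots & a_{2n} \\
\vdots & \vdots & & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn}
\end{bmatrix}
$$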

Definition and Notation

The entry that occurs in row $i$ and column $j$ of a matrix $A$ will be denoted by $a_{ij}$ or $(A)_{ij}$. When the entries are real numbers, they are commonly referred to as scalars.

The preceding matrix can be written as $[a_{ij}]_{m \times n}$ or $[a_{ij}]$.

A matrix $A$ with $n$ rows and $n$ columns is called a square matrix of order $n$.


Definition

Two matrices are defined to be equal if they have the

same size and their corresponding entries are equal

If A = [aij] and B = [bij] have the same size, then A

= B if and only if aij = bij for all i and j

If A and B are matrices of the same size, then the sum

A + B is the matrix obtained by adding the entries of

B to the corresponding entries of A.


Definition

The difference A – B is the matrix obtained by

subtracting the entries of B from the corresponding

entries of A

If A is any matrix and c is any scalar, then the product

cA is the matrix obtained by multiplying each entry of

the matrix A by c. The matrix cA is said to be the scalar

multiple of A

If $A = [a_{ij}]$, then $(cA)_{ij} = c(A)_{ij} = c\,a_{ij}$.


Definitions

If $A$ is an $m \times r$ matrix and $B$ is an $r \times n$ matrix, then the product $AB$ is the $m \times n$ matrix whose entries are determined as follows.

To find the entry in row i and column j of AB,

single out row i from the matrix A and column j

from the matrix B. Multiply the corresponding

entries from the row and column together and then

add up the resulting products


Definitions

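That is, $(AB)_{m \times n} = A_{m \times r} B_{r \times n}$:

$$
AB = \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1r} \\
a_{21} & a_{22} & \cdots & a_{2r} \\
\vdots & \vdots & & \vdots \\
a_{i1} & a_{i2} & \cdots & a_{ir} \\
\vdots & \vdots & & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mr}
\end{bmatrix}
\begin{bmatrix}
b_{11} & b_{12} & \cdots & b_{1j} & \cdots & b_{1n} \\
b_{21} & b_{22} & \cdots & b_{2j} & \cdots & b_{2n} \\
\vdots & \vdots & & \vdots & & \vdots \\
b_{r1} & b_{r2} & \cdots & b_{rj} & \cdots & b_{rn}
\end{bmatrix}
$$

The entry $(AB)_{ij}$ in row $i$ and column $j$ of $AB$ is given by

$$(AB)_{ij} = a_{i1}b_{1j} + a_{i2}b_{2j} + a_{i3}b_{3j} + \cdots + a_{ir}b_{rj}$$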
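The row-times-column rule above can be sketched in a few lines of pure Python. This is only an illustration: the function name `matmul` and the sample matrices are assumptions chosen for the example, and matrices are represented as lists of rows.

```python
def matmul(A, B):
    """Multiply an m x r matrix A by an r x n matrix B (lists of rows)."""
    m, r, n = len(A), len(B), len(B[0])
    if any(len(row) != r for row in A):
        raise ValueError("the number of columns of A must equal the number of rows of B")
    # (AB)_ij = a_i1*b_1j + a_i2*b_2j + ... + a_ir*b_rj
    return [[sum(A[i][k] * B[k][j] for k in range(r)) for j in range(n)]
            for i in range(m)]


A = [[1, 2, 4],
     [2, 6, 0]]            # 2 x 3
B = [[4, 1, 4, 3],
     [0, -1, 3, 1],
     [2, 7, 5, 2]]         # 3 x 4

AB = matmul(A, B)          # 2 x 4
print(AB)                  # [[12, 27, 30, 13], [8, -4, 26, 12]]
```

For instance, the entry in row 2 and column 3 is (2)(4) + (6)(3) + (0)(5) = 26.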


Partitioned Matrices

A matrix can be subdivided or partitioned into smaller

matrices by inserting horizontal and vertical rules

between selected rows and columns


Partitioned Matrices

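For example, three possible partitions of a general $3 \times 4$ matrix

$$
A = \begin{bmatrix}
a_{11} & a_{12} & a_{13} & a_{14} \\
a_{21} & a_{22} & a_{23} & a_{24} \\
a_{31} & a_{32} & a_{33} & a_{34}
\end{bmatrix}
$$

are the following.

The partition of $A$ into four submatrices $A_{11}$, $A_{12}$, $A_{21}$, and $A_{22}$:

$$
A = \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix}
$$

The partition of $A$ into its row matrices $\mathbf{r}_1$, $\mathbf{r}_2$, and $\mathbf{r}_3$:

$$
A = \begin{bmatrix} \mathbf{r}_1 \\ \mathbf{r}_2 \\ \mathbf{r}_3 \end{bmatrix},
\qquad \mathbf{r}_i = \begin{bmatrix} a_{i1} & a_{i2} & a_{i3} & a_{i4} \end{bmatrix}
$$

The partition of $A$ into its column matrices $\mathbf{c}_1$, $\mathbf{c}_2$, $\mathbf{c}_3$, and $\mathbf{c}_4$:

$$
A = \begin{bmatrix} \mathbf{c}_1 & \mathbf{c}_2 & \mathbf{c}_3 & \mathbf{c}_4 \end{bmatrix},
\qquad \mathbf{c}_j = \begin{bmatrix} a_{1j} \\ a_{2j} \\ a_{3j} \end{bmatrix}
$$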

Multiplication by Columns and by

Rows

It is possible to compute a particular row or

column of a matrix product AB without

computing the entire product:

jth column matrix of AB = A[jth column matrix of B]

ith row matrix of AB = [ith row matrix of A]B


Multiplication by Columns and by

Rows

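If $\mathbf{a}_1, \mathbf{a}_2, \ldots, \mathbf{a}_m$ denote the row matrices of $A$ and $\mathbf{b}_1, \mathbf{b}_2, \ldots, \mathbf{b}_n$ denote the column matrices of $B$, then

$$
AB = A\begin{bmatrix} \mathbf{b}_1 & \mathbf{b}_2 & \cdots & \mathbf{b}_n \end{bmatrix}
= \begin{bmatrix} A\mathbf{b}_1 & A\mathbf{b}_2 & \cdots & A\mathbf{b}_n \end{bmatrix}
$$

$$
AB = \begin{bmatrix} \mathbf{a}_1 \\ \mathbf{a}_2 \\ \vdots \\ \mathbf{a}_m \end{bmatrix} B
= \begin{bmatrix} \mathbf{a}_1 B \\ \mathbf{a}_2 B \\ \vdots \\ \mathbf{a}_m B \end{bmatrix}
$$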

Matrix Products as Linear

Combinations

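Let

$$
A = \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1n} \\
a_{21} & a_{22} & \cdots & a_{2n} \\
\vdots & \vdots & & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn}
\end{bmatrix}
\quad \text{and} \quad
\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}
$$

Then

$$
A\mathbf{x} = \begin{bmatrix}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n \\
\vdots \\
a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n
\end{bmatrix}
= x_1 \begin{bmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{m1} \end{bmatrix}
+ x_2 \begin{bmatrix} a_{12} \\ a_{22} \\ \vdots \\ a_{m2} \end{bmatrix}
+ \cdots
+ x_n \begin{bmatrix} a_{1n} \\ a_{2n} \\ \vdots \\ a_{mn} \end{bmatrix}
$$

The product $A\mathbf{x}$ of a matrix $A$ with a column matrix $\mathbf{x}$ is a linear combination of the column matrices of $A$ with the coefficients coming from the matrix $\mathbf{x}$.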
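As a small check of the statement that $A\mathbf{x}$ is a linear combination of the columns of $A$, the sketch below computes the product both ways. The helper names `matvec` and `column_combination` and the sample data are illustrative assumptions, not part of the original slides.

```python
def matvec(A, x):
    """Row-by-row product: entry i is the sum over j of a_ij * x_j."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def column_combination(A, x):
    """Build A x as x_1*(column 1) + x_2*(column 2) + ... + x_n*(column n)."""
    m, n = len(A), len(A[0])
    result = [0] * m
    for j in range(n):            # loop over the columns of A
        for i in range(m):
            result[i] += x[j] * A[i][j]
    return result


A = [[-1, 3, 2],
     [1, 2, -3],
     [2, 1, -2]]
x = [2, -1, 3]

print(matvec(A, x))               # [1, -9, -3]
print(column_combination(A, x))   # [1, -9, -3]
```

Both computations agree, as the identity above requires.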

Matrix Form of a Linear System

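Consider any system of $m$ linear equations in $n$ unknowns:

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\
&\;\;\vdots \\
a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= b_m
\end{aligned}
$$

Since two matrices are equal if and only if their corresponding entries are equal, the system can be written as a single matrix equation:

$$
\begin{bmatrix}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n \\
\vdots \\
a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n
\end{bmatrix}
= \begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ b_m \end{bmatrix}
$$

Writing the left side as a product gives

$$
\begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1n} \\
a_{21} & a_{22} & \cdots & a_{2n} \\
\vdots & \vdots & & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn}
\end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}
= \begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ b_m \end{bmatrix}
$$

that is,

$$A\mathbf{x} = \mathbf{b}$$

The matrix $A$ is called the coefficient matrix of the system.

The augmented matrix of the system is given by

$$
[A \mid \mathbf{b}] = \left[\begin{array}{cccc|c}
a_{11} & a_{12} & \cdots & a_{1n} & b_1 \\
a_{21} & a_{22} & \cdots & a_{2n} & b_2 \\
\vdots & \vdots & & \vdots & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn} & b_m
\end{array}\right]
$$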

Definitions

If $A$ is any $m \times n$ matrix, then the transpose of $A$, denoted by $A^T$, is defined to be the $n \times m$ matrix that results from interchanging the rows and columns of $A$.

That is, the first column of $A^T$ is the first row of $A$, the second column of $A^T$ is the second row of $A$, and so forth.


Definitions

If A is a square matrix, then the trace of A ,

denoted by tr(A), is defined to be the sum of the

entries on the main diagonal of A. The trace of

A is undefined if A is not a square matrix.

For an $n \times n$ matrix $A = [a_{ij}]$,

$$\operatorname{tr}(A) = \sum_{i=1}^{n} a_{ii}$$
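The definitions of the transpose and the trace translate directly into code. The following is a minimal sketch; the function names `transpose` and `trace` and the sample matrix are illustrative assumptions.

```python
def transpose(A):
    """Return A^T: row i of A becomes column i of the result."""
    return [list(col) for col in zip(*A)]


def trace(A):
    """Sum of the main-diagonal entries; defined only for square matrices."""
    if any(len(row) != len(A) for row in A):
        raise ValueError("the trace is undefined for a non-square matrix")
    return sum(A[i][i] for i in range(len(A)))


A = [[-1, 2, 7],
     [3, 5, -8],
     [1, 2, 7]]

print(transpose(A))   # [[-1, 3, 1], [2, 5, 2], [7, -8, 7]]
print(trace(A))       # -1 + 5 + 7 = 11
```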


Properties of Matrix Operations

For real numbers $a$ and $b$, we always have $ab = ba$, which is called the commutative law for multiplication. For matrices, however, $AB$ and $BA$ need not be equal.

Equality can fail to hold for three reasons:

The product AB is defined but BA is undefined.

AB and BA are both defined but have different sizes.

It is possible to have $AB \neq BA$ even if both $AB$ and $BA$ are defined and have the same size.
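A concrete pair of $2 \times 2$ matrices shows the third case, where both products are defined and have the same size but still differ. The matrices and the helper `matmul` below are illustrative choices for this sketch.

```python
def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


A = [[-1, 0],
     [2, 3]]
B = [[1, 2],
     [3, 0]]

AB = matmul(A, B)
BA = matmul(B, A)
print(AB)          # [[-1, -2], [11, 4]]
print(BA)          # [[3, 6], [-3, 0]]
print(AB == BA)    # False
```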

Theorem 2

(Properties of Matrix Arithmetic)

Assuming that the sizes of the matrices are such that the

indicated operations can be performed, the following rules

of matrix arithmetic are valid:

A + B = B + A (commutative law for addition)

A + (B + C) = (A + B) + C (associative law for addition)

A(BC) = (AB)C (associative law for multiplication)

A(B + C) = AB + AC (left distributive law)

(B + C)A = BA + CA (right distributive law)

A(B – C) = AB – AC

(B – C)A = BA – CA

a(B + C) = aB + aC

a(B – C) = aB – aC

(a + b)C = aC + bC

(a – b)C = aC – bC

a(bC) = (ab)C

a(BC) = (aB)C = B(aC)


Zero Matrices

A matrix, all of whose entries are zero, is called a

zero matrix

A zero matrix will be denoted by $0$.

If it is important to emphasize the size, we shall write $0_{m \times n}$ for the $m \times n$ zero matrix.

In keeping with our convention of using boldface symbols for matrices with one column, we will denote a zero matrix with one column by $\mathbf{0}$.


Zero Matrices

Theorem 3 (Properties of Zero Matrices)

Assuming that the sizes of the matrices are such that the indicated operations can be performed, the following rules of matrix arithmetic are valid:

A+0=0+A=A

A–A=0

0 – A = -A

A0 = 0;

0A = 0


Identity Matrices

A square matrix with 1s on the main diagonal and 0s off the main diagonal is called an identity matrix and is denoted by $I$, or $I_n$ for the $n \times n$ identity matrix.

If $A$ is an $m \times n$ matrix, then $A I_n = A$ and $I_m A = A$.

An identity matrix plays the same role in matrix

arithmetic as the number 1 plays in the

numerical relationships a·1 = 1·a = a


Definition

If A is a square matrix, and if a matrix B of the

same size can be found such that AB = BA = I,

then A is said to be invertible and B is called an

inverse of A. If no such matrix B can be found,

then A is said to be singular.

Remark:

The inverse of $A$ is denoted by $A^{-1}$.

Not every (square) matrix has an inverse.

An invertible matrix has exactly one inverse.


Theorems

Theorem 4

If B and C are both inverses of the matrix A, then B = C

Theorem 5

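The matrix

$$A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$$

is invertible if $ad - bc \neq 0$, in which case the inverse is given by the formula

$$A^{-1} = \frac{1}{ad - bc} \begin{bmatrix} d & -b \\ -c & a \end{bmatrix}$$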
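The $2 \times 2$ inverse formula can be tried out directly. The sketch below uses exact rational arithmetic from Python's standard `fractions` module; the function name `inverse_2x2` and the sample matrix are assumptions made for illustration.

```python
from fractions import Fraction


def inverse_2x2(A):
    """Inverse of [[a, b], [c, d]] via (1 / (ad - bc)) * [[d, -b], [-c, a]]."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("ad - bc = 0, so the formula does not apply")
    f = Fraction(1) / det          # exact value of 1 / (ad - bc)
    return [[d * f, -b * f],
            [-c * f, a * f]]


A = [[1, 2],
     [3, 4]]                       # ad - bc = 4 - 6 = -2
A_inv = inverse_2x2(A)
print(A_inv)                       # entries -2, 1, 3/2, -1/2 as Fractions

# Check that A * A_inv is the identity matrix.
product = [[sum(A[i][k] * A_inv[k][j] for k in range(2)) for j in range(2)]
           for i in range(2)]
print(product == [[1, 0], [0, 1]])  # True
```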


Theorems

Theorem 6

If $A$ and $B$ are invertible matrices of the same size, then $AB$ is invertible and $(AB)^{-1} = B^{-1}A^{-1}$.


Definition

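If $A$ is a square matrix, then we define the nonnegative integer powers of $A$ to be

$$A^0 = I, \qquad A^n = \underbrace{A A \cdots A}_{n \text{ factors}} \quad (n > 0)$$

If $A$ is invertible, then we define the negative integer powers to be

$$A^{-n} = (A^{-1})^n = \underbrace{A^{-1} A^{-1} \cdots A^{-1}}_{n \text{ factors}} \quad (n > 0)$$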


Theorems

Theorem 7 (Laws of Exponents)

If $A$ is a square matrix and $r$ and $s$ are integers (with $A$ invertible whenever a negative power occurs), then $A^r A^s = A^{r+s}$ and $(A^r)^s = A^{rs}$.

Theorem 8 (Laws of Exponents)

If $A$ is an invertible matrix, then:

$A^{-1}$ is invertible and $(A^{-1})^{-1} = A$

$A^n$ is invertible and $(A^n)^{-1} = (A^{-1})^n$ for $n = 0, 1, 2, \ldots$

For any nonzero scalar $k$, the matrix $kA$ is invertible and $(kA)^{-1} = \frac{1}{k}A^{-1}$


Theorems

Theorem 9 (Properties of the Transpose)

If the sizes of the matrices are such that the stated

operations can be performed, then

$(A^T)^T = A$

$(A + B)^T = A^T + B^T$ and $(A - B)^T = A^T - B^T$

$(kA)^T = kA^T$, where $k$ is any scalar

$(AB)^T = B^T A^T$

Theorem 10 (Invertibility of a Transpose)

If $A$ is an invertible matrix, then $A^T$ is also invertible and $(A^T)^{-1} = (A^{-1})^T$.


Theorems

Theorem 11

Every system of linear equations has either no solutions, exactly one solution, or infinitely many solutions.

Theorem 12

If $A$ is an invertible $n \times n$ matrix, then for each $n \times 1$ matrix $\mathbf{b}$, the system of equations $A\mathbf{x} = \mathbf{b}$ has exactly one solution, namely, $\mathbf{x} = A^{-1}\mathbf{b}$.


Example


Theorems

Theorem 13

Let A be a square matrix

If $B$ is a square matrix satisfying $BA = I$, then $B = A^{-1}$

If $B$ is a square matrix satisfying $AB = I$, then $B = A^{-1}$

Theorem 14

Let A and B be square matrices of the same size. If

AB is invertible, then A and B must also be

invertible.


Definitions

A square matrix $A$ is an $m \times n$ matrix with $m = n$; the $(i,i)$-entries for $1 \le i \le m$ form the main diagonal of $A$.

A diagonal matrix is a square matrix all of whose entries not on the main diagonal equal zero. By $\operatorname{diag}(d_1, \ldots, d_m)$ is meant the $m \times m$ diagonal matrix whose $(i,i)$-entry equals $d_i$ for $1 \le i \le m$.


Definitions

An $m \times n$ lower-triangular matrix $L$ satisfies $(L)_{ij} = 0$ if $i < j$, for $1 \le i \le m$ and $1 \le j \le n$.

An $m \times n$ upper-triangular matrix $U$ satisfies $(U)_{ij} = 0$ if $i > j$, for $1 \le i \le m$ and $1 \le j \le n$.

A unit-lower (or unit-upper)-triangular matrix $T$ is a lower (or upper)-triangular matrix satisfying $(T)_{ii} = 1$ for $1 \le i \le \min(m,n)$.


Properties of Diagonal Matrices

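A general $n \times n$ diagonal matrix $D$ can be written as

$$
D = \begin{bmatrix}
d_1 & 0 & \cdots & 0 \\
0 & d_2 & \cdots & 0 \\
\vdots & \vdots & & \vdots \\
0 & 0 & \cdots & d_n
\end{bmatrix}
$$

A diagonal matrix is invertible if and only if all of its diagonal entries are nonzero; in this case

$$
D^{-1} = \begin{bmatrix}
1/d_1 & 0 & \cdots & 0 \\
0 & 1/d_2 & \cdots & 0 \\
\vdots & \vdots & & \vdots \\
0 & 0 & \cdots & 1/d_n
\end{bmatrix}
$$

Powers of diagonal matrices are easy to compute:

$$
D^k = \begin{bmatrix}
d_1^k & 0 & \cdots & 0 \\
0 & d_2^k & \cdots & 0 \\
\vdots & \vdots & & \vdots \\
0 & 0 & \cdots & d_n^k
\end{bmatrix}
$$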

Properties of Diagonal Matrices

Matrix products that involve diagonal

factors are especially easy to compute


Theorem

The transpose of a lower triangular matrix is

upper triangular, and the transpose of an

upper triangular matrix is lower triangular

The product of lower triangular matrices is

lower triangular, and the product of upper

triangular matrices is upper triangular


Theorem

A triangular matrix is invertible if and only

if its diagonal entries are all nonzero

The inverse of an invertible lower

triangular matrix is lower triangular, and

the inverse of an invertible upper triangular

matrix is upper triangular


Symmetric Matrices

Definition

A (square) matrix $A$ for which $A^T = A$, so that $(A)_{ij} = (A)_{ji}$ for all $i$ and $j$, is said to be symmetric.

Theorem 17

If $A$ and $B$ are symmetric matrices with the same size, and if $k$ is any scalar, then

$A^T$ is symmetric

$A + B$ and $A - B$ are symmetric

$kA$ is symmetric


Symmetric Matrices

Remark

The product of two symmetric matrices is

symmetric if and only if the matrices

commute, i.e., AB = BA


Theorems

Theorem 18

If $A$ is an invertible symmetric matrix, then $A^{-1}$ is symmetric.

Remark:

In general, a symmetric matrix need not be invertible.

The products $AA^T$ and $A^TA$ are always symmetric.


Theorems

Theorem 19

If $A$ is an invertible matrix, then $AA^T$ and $A^TA$ are also invertible.


Example


Euclidean Vector Spaces

Definitions

If $n$ is a positive integer, an ordered n-tuple (vector) is a sequence of $n$ real numbers $(a_1, a_2, \ldots, a_n)$. The set of all ordered n-tuples is called n-space and is denoted by $R^n$.


Definitions

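Two vectors $\mathbf{u} = (u_1, u_2, \ldots, u_n)$ and $\mathbf{v} = (v_1, v_2, \ldots, v_n)$ in $R^n$ are called equal if

$$u_1 = v_1, \quad u_2 = v_2, \quad \ldots, \quad u_n = v_n$$

The sum $\mathbf{u} + \mathbf{v}$ is defined by

$$\mathbf{u} + \mathbf{v} = (u_1 + v_1,\; u_2 + v_2,\; \ldots,\; u_n + v_n)$$

and if $k$ is any scalar, the scalar multiple $k\mathbf{u}$ is defined by

$$k\mathbf{u} = (ku_1, ku_2, \ldots, ku_n)$$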


Remarks

The operations of addition and scalar

multiplication in this definition are called the

standard operations on Rn.

The zero vector in Rn is denoted by 0 and is

defined to be the vector 0 = (0, 0, …, 0).


Remarks

If u = (u1 ,u2 ,…,un) is any vector in Rn, then the

negative (or additive inverse) of u is denoted by

-u and is defined by -u = (-u1 ,-u2 ,…,-un).

The difference of vectors in Rn is defined by

v – u = v + (-u) = (v1 – u1 ,v2 – u2 ,…,vn – un)


Theorem (Properties of Vector in Rn)

If u = (u1 ,u2 ,…,un), v = (v1 ,v2 ,…, vn), and

w = (w1 ,w2 ,…, wn) are vectors in Rn and k

and l are scalars, then:

u+v=v+u

u + (v + w) = (u + v) + w

u+0=0+u=u

u + (-u) = 0; that is u – u = 0


Theorem (Properties of Vector in Rn)

k(lu) = (kl)u

k(u + v) = ku + kv

(k+l)u = ku+lu

1u = u


Euclidean Inner Product

Definition

If u = (u1 ,u2 ,…,un), v = (v1 ,v2 ,…, vn) are

vectors in Rn, then the Euclidean inner product

u · v is defined by

u · v = u1 v1 + u2 v2 + … + un vn


Euclidean Inner Product

Example

The Euclidean inner product of the vectors

u = (-1,3,5,7) and v = (5,-4,7,0) in R4 is

u · v = (-1)(5) + (3)(-4) + (5)(7) + (7)(0) = 18
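The worked inner product above can be reproduced with a short function. This is a minimal sketch; the name `dot` is an illustrative choice, and vectors are represented as Python tuples.

```python
def dot(u, v):
    """Euclidean inner product u . v = u_1*v_1 + u_2*v_2 + ... + u_n*v_n."""
    if len(u) != len(v):
        raise ValueError("both vectors must lie in the same R^n")
    return sum(ui * vi for ui, vi in zip(u, v))


u = (-1, 3, 5, 7)
v = (5, -4, 7, 0)
print(dot(u, v))   # 18
```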


Properties of Euclidean Inner

Product

Theorem 22

If u, v and w are vectors in Rn and k is any scalar,

then

u·v=v·u

(u + v) · w = u · w + v · w

(k u) · v = k(u · v)

v · v ≥ 0; Further, v · v = 0 if and only if v = 0


Properties of Euclidean Inner

Product

Example

(3u + 2v) · (4u + v)

= (3u) · (4u + v) + (2v) · (4u + v )

= (3u) · (4u) + (3u) · v + (2v) · (4u) + (2v) · v

=12(u · u) + 11(u · v) + 2(v · v)


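Norm and Distance in Euclidean n-Space

We define the Euclidean norm (or Euclidean length) of a vector $\mathbf{u} = (u_1, u_2, \ldots, u_n)$ in $R^n$ by

$$\|\mathbf{u}\| = (\mathbf{u} \cdot \mathbf{u})^{1/2} = \sqrt{u_1^2 + u_2^2 + \cdots + u_n^2}$$

Similarly, the Euclidean distance between the points $\mathbf{u} = (u_1, u_2, \ldots, u_n)$ and $\mathbf{v} = (v_1, v_2, \ldots, v_n)$ in $R^n$ is defined by

$$d(\mathbf{u}, \mathbf{v}) = \|\mathbf{u} - \mathbf{v}\| = \sqrt{(u_1 - v_1)^2 + (u_2 - v_2)^2 + \cdots + (u_n - v_n)^2}$$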


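Norm and Distance in Euclidean n-Space

Example

If $\mathbf{u} = (1, 3, -2, 7)$ and $\mathbf{v} = (0, 7, 2, 2)$, then in the Euclidean space $R^4$

$$\|\mathbf{u}\| = \sqrt{(1)^2 + (3)^2 + (-2)^2 + (7)^2} = \sqrt{63} = 3\sqrt{7}$$

$$d(\mathbf{u}, \mathbf{v}) = \sqrt{(1 - 0)^2 + (3 - 7)^2 + (-2 - 2)^2 + (7 - 2)^2} = \sqrt{58}$$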
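The same norm and distance can be checked numerically. The sketch below is illustrative only; the function names `norm` and `distance` are assumptions, and the results are floating-point approximations of $\sqrt{63}$ and $\sqrt{58}$.

```python
import math


def norm(u):
    """Euclidean norm: the square root of u . u."""
    return math.sqrt(sum(ui * ui for ui in u))


def distance(u, v):
    """Euclidean distance d(u, v) = ||u - v||."""
    return norm([ui - vi for ui, vi in zip(u, v)])


u = (1, 3, -2, 7)
v = (0, 7, 2, 2)

print(norm(u), 3 * math.sqrt(7))       # both approximate sqrt(63)
print(distance(u, v), math.sqrt(58))   # both approximate sqrt(58)
```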


Theorems

Theorem 23 (Cauchy-Schwarz Inequality in Rn)

If u = (u1 ,u2 ,…,un) and v = (v1 , v2 ,…,vn) are vectors

in Rn, then

|u · v| ≤ || u || || v ||

Theorem 24 (Properties of Length in Rn)

If u and v are vectors in Rn and k is any scalar, then

|| u || ≥ 0

|| u || = 0 if and only if u = 0

|| ku || = | k ||| u ||

|| u + v || ≤ || u || + || v ||

(Triangle inequality)


Theorems

Theorem 25 (Properties of Distance in Rn)

If u, v, and w are vectors in Rn and k is any scalar,

then

d(u, v) ≥ 0

d(u, v) = 0 if and only if u = v

d(u, v) = d(v, u)

d(u, v) ≤ d(u, w ) + d(w, v) (Triangle inequality)


Theorems

Theorem 26

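If $\mathbf{u}$ and $\mathbf{v}$ are vectors in $R^n$ with the Euclidean inner product, then

$$\mathbf{u} \cdot \mathbf{v} = \tfrac{1}{4}\|\mathbf{u} + \mathbf{v}\|^2 - \tfrac{1}{4}\|\mathbf{u} - \mathbf{v}\|^2$$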


Orthogonality(正交性)

Definition

Two vectors $\mathbf{u}$ and $\mathbf{v}$ in $R^n$ are called orthogonal if $\mathbf{u} \cdot \mathbf{v} = 0$.

Example

In the Euclidean space $R^4$, the vectors $\mathbf{u} = (-2, 3, 1, 4)$ and $\mathbf{v} = (1, 2, 0, -1)$ are orthogonal, since

$$\mathbf{u} \cdot \mathbf{v} = (-2)(1) + (3)(2) + (1)(0) + (4)(-1) = 0$$

Theorem 27 (Pythagorean Theorem in $R^n$)

If $\mathbf{u}$ and $\mathbf{v}$ are orthogonal vectors in $R^n$ with the Euclidean inner product, then

$$\|\mathbf{u} + \mathbf{v}\|^2 = \|\mathbf{u}\|^2 + \|\mathbf{v}\|^2$$


Matrix Formulae for the Dot

Product

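If we use column matrix notation for the vectors

$$\mathbf{u} = [u_1\; u_2\; \cdots\; u_n]^T \quad \text{and} \quad \mathbf{v} = [v_1\; v_2\; \cdots\; v_n]^T$$

or

$$
\mathbf{u} = \begin{bmatrix} u_1 \\ \vdots \\ u_n \end{bmatrix}
\quad \text{and} \quad
\mathbf{v} = \begin{bmatrix} v_1 \\ \vdots \\ v_n \end{bmatrix}
$$

then

$$\mathbf{u} \cdot \mathbf{v} = \mathbf{v}^T \mathbf{u}$$

$$A\mathbf{u} \cdot \mathbf{v} = \mathbf{u} \cdot A^T\mathbf{v}$$

$$\mathbf{u} \cdot A\mathbf{v} = A^T\mathbf{u} \cdot \mathbf{v}$$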


A Dot Product View of Matrix

Multiplication

If $A = [a_{ij}]$ is an $m \times r$ matrix and $B = [b_{ij}]$ is an $r \times n$ matrix, then the $ij$th entry of $AB$ is

$$a_{i1}b_{1j} + a_{i2}b_{2j} + a_{i3}b_{3j} + \cdots + a_{ir}b_{rj}$$

which is the dot product of the $i$th row vector of $A$ and the $j$th column vector of $B$.


A Dot Product View of Matrix

Multiplication

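Thus, if the row vectors of $A$ are $\mathbf{r}_1, \mathbf{r}_2, \ldots, \mathbf{r}_m$ and the column vectors of $B$ are $\mathbf{c}_1, \mathbf{c}_2, \ldots, \mathbf{c}_n$, then the matrix product $AB$ can be expressed as

$$
AB = \begin{bmatrix}
\mathbf{r}_1 \cdot \mathbf{c}_1 & \mathbf{r}_1 \cdot \mathbf{c}_2 & \cdots & \mathbf{r}_1 \cdot \mathbf{c}_n \\
\mathbf{r}_2 \cdot \mathbf{c}_1 & \mathbf{r}_2 \cdot \mathbf{c}_2 & \cdots & \mathbf{r}_2 \cdot \mathbf{c}_n \\
\vdots & \vdots & & \vdots \\
\mathbf{r}_m \cdot \mathbf{c}_1 & \mathbf{r}_m \cdot \mathbf{c}_2 & \cdots & \mathbf{r}_m \cdot \mathbf{c}_n
\end{bmatrix}
$$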


Functions from Rn to R

A function is a rule f that associates with each

element in a set A one and only one element in

a set B.

If f associates the element b with the element a,

then we write b = f(a) and say that b is the

image of a under f or that f(a) is the value of f

at a.


Functions from Rn to R

The set A is called the domain of f and the set B

is called the codomain of f.

The subset of B consisting of all possible

values for f as a varies over A is called the

range of f.


Examples

| Formula | Example | Classification | Description |
| --- | --- | --- | --- |
| $f(x)$ | $f(x) = x^2$ | Real-valued function of a real variable | Function from $R$ to $R$ |
| $f(x, y)$ | $f(x, y) = x^2 + y^2$ | Real-valued function of two real variables | Function from $R^2$ to $R$ |
| $f(x, y, z)$ | $f(x, y, z) = x^2 + y^2 + z^2$ | Real-valued function of three real variables | Function from $R^3$ to $R$ |
| $f(x_1, x_2, \ldots, x_n)$ | $f(x_1, x_2, \ldots, x_n) = x_1^2 + x_2^2 + \cdots + x_n^2$ | Real-valued function of $n$ real variables | Function from $R^n$ to $R$ |

Function from Rn to Rm

If the domain of a function $f$ is $R^n$ and the codomain is $R^m$, then $f$ is called a map or transformation from $R^n$ to $R^m$. We say that the function $f$ maps $R^n$ into $R^m$, and denote this by $f: R^n \to R^m$.

If $m = n$, the transformation $f: R^n \to R^n$ is called an operator on $R^n$.


Function from Rn to Rm

Suppose f1, f2, …, fm are real-valued functions of

n real variables, say

w1 = f1(x1,x2,…,xn)

…

wm = fm(x1,x2,…,xn)

These $m$ equations assign a unique point $(w_1, w_2, \ldots, w_m)$ in $R^m$ to each point $(x_1, x_2, \ldots, x_n)$ in $R^n$ and thus define a transformation from $R^n$ to $R^m$.

Function from Rn to Rm

If we denote this transformation by $T: R^n \to R^m$, then

$$T(x_1, x_2, \ldots, x_n) = (w_1, w_2, \ldots, w_m)$$


Linear Transformations from Rn

to Rm

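A linear transformation (or a linear operator if $m = n$) $T: R^n \to R^m$ is defined by equations of the form

$$
\begin{aligned}
w_1 &= a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n \\
w_2 &= a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n \\
&\;\;\vdots \\
w_m &= a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n
\end{aligned}
$$

or

$$
\begin{bmatrix} w_1 \\ w_2 \\ \vdots \\ w_m \end{bmatrix}
= \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1n} \\
a_{21} & a_{22} & \cdots & a_{2n} \\
\vdots & \vdots & & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn}
\end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}
$$

or

$$\mathbf{w} = A\mathbf{x}$$

The matrix $A = [a_{ij}]$ is called the standard matrix for the linear transformation $T$, and $T$ is called multiplication by $A$.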


Example (Transformation and Linear

Transformation)

The equations

$$
\begin{aligned}
w_1 &= x_1 + x_2 \\
w_2 &= 3x_1 x_2 \\
w_3 &= x_1^2 - x_2^2
\end{aligned}
$$

define a transformation $T: R^2 \to R^3$:

$$T(x_1, x_2) = (x_1 + x_2,\; 3x_1 x_2,\; x_1^2 - x_2^2)$$

Thus, for example, $T(1, -2) = (-1, -6, -3)$.


Remarks

Notations:

If it is important to emphasize that $A$ is the standard matrix for $T$, we denote the linear transformation $T: R^n \to R^m$ by $T_A: R^n \to R^m$. Thus,

$$T_A(\mathbf{x}) = A\mathbf{x}$$

We can also denote the standard matrix for $T$ by the symbol $[T]$, or

$$T(\mathbf{x}) = [T]\mathbf{x}$$


Remarks

Remark:

We have established a correspondence between $m \times n$ matrices and linear transformations from $R^n$ to $R^m$:

To each matrix $A$ there corresponds a linear transformation $T_A$ (multiplication by $A$), and to each linear transformation $T: R^n \to R^m$ there corresponds an $m \times n$ matrix $[T]$ (the standard matrix for $T$).


Examples

Zero Transformation from Rn to Rm

If $0$ is the $m \times n$ zero matrix and $\mathbf{0}$ is the zero vector in $R^m$, then for every vector $\mathbf{x}$ in $R^n$

$$T_0(\mathbf{x}) = 0\mathbf{x} = \mathbf{0}$$

So multiplication by zero maps every vector in $R^n$ into the zero vector in $R^m$. We call $T_0$ the zero transformation from $R^n$ to $R^m$.


Examples

Identity Operator on Rn

If $I$ is the $n \times n$ identity matrix, then for every vector $\mathbf{x}$ in $R^n$

$$T_I(\mathbf{x}) = I\mathbf{x} = \mathbf{x}$$

So multiplication by $I$ maps every vector in $R^n$ into itself.

We call $T_I$ the identity operator on $R^n$.


Projection Operators

In general, a projection operator (or more

precisely an orthogonal projection operator)

on R2 or R3 is any operator that maps each

vector into its orthogonal projection on a

line or plane through the origin.

The projection operators are linear.


Compositions of Linear

Transformations

If $T_A: R^n \to R^k$ and $T_B: R^k \to R^m$ are linear transformations, then for each $\mathbf{x}$ in $R^n$ one can first compute $T_A(\mathbf{x})$, which is a vector in $R^k$, and then one can compute $T_B(T_A(\mathbf{x}))$, which is a vector in $R^m$.

Thus, the application of $T_A$ followed by $T_B$ produces a transformation from $R^n$ to $R^m$.


Compositions of Linear

Transformations

This transformation is called the composition of $T_B$ with $T_A$ and is denoted by $T_B \circ T_A$. Thus

$$(T_B \circ T_A)(\mathbf{x}) = T_B(T_A(\mathbf{x}))$$

The composition $T_B \circ T_A$ is linear since

$$(T_B \circ T_A)(\mathbf{x}) = T_B(T_A(\mathbf{x})) = B(A\mathbf{x}) = (BA)\mathbf{x}$$

The standard matrix for $T_B \circ T_A$ is $BA$. That is,

$$T_B \circ T_A = T_{BA}$$

Multiplying matrices is equivalent to composing the corresponding linear transformations in the right-to-left order of the factors.
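The identity $T_B \circ T_A = T_{BA}$ can be checked on a small example. In the sketch below, the matrices `A` (from $R^2$ to $R^3$) and `B` (from $R^3$ to $R^2$), the vector `x`, and the helper names are all illustrative assumptions.

```python
def matvec(M, x):
    """Multiply the matrix M (list of rows) by the vector x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in M]


def matmul(P, Q):
    """Product PQ of two matrices given as lists of rows."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))]
            for i in range(len(P))]


A = [[1, 2],
     [0, 1],
     [3, -1]]            # T_A : R^2 -> R^3
B = [[2, 0, 1],
     [1, -1, 0]]         # T_B : R^3 -> R^2
x = [1, 2]

step_by_step = matvec(B, matvec(A, x))   # apply T_A first, then T_B
BA = matmul(B, A)                        # standard matrix of the composition
one_step = matvec(BA, x)

print(step_by_step)   # [11, 3]
print(BA)             # [[5, 3], [1, 1]]
print(one_step)       # [11, 3]
```

Note the order: $T_A$ is applied first, yet $A$ is the right-hand factor of $BA$.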


Compositions of Three or More

Linear Transformations

Consider the linear transformations

$$T_1: R^n \to R^k, \quad T_2: R^k \to R^l, \quad T_3: R^l \to R^m$$

We can define the composition $(T_3 \circ T_2 \circ T_1): R^n \to R^m$ by

$$(T_3 \circ T_2 \circ T_1)(\mathbf{x}) = T_3(T_2(T_1(\mathbf{x})))$$


Compositions of Three or More

Linear Transformations

This composition is a linear transformation and the standard matrix for $T_3 \circ T_2 \circ T_1$ is related to the standard matrices for $T_1$, $T_2$, and $T_3$ by

$$[T_3 \circ T_2 \circ T_1] = [T_3][T_2][T_1]$$

If the standard matrices for $T_1$, $T_2$, and $T_3$ are denoted by $A$, $B$, and $C$, respectively, then we also have

$$T_C \circ T_B \circ T_A = T_{CBA}$$


One-to-One Linear

transformations

Definition

A linear transformation $T: R^n \to R^m$ is said to be one-to-one if $T$ maps distinct vectors (points) in $R^n$ into distinct vectors (points) in $R^m$.

Remark:

That is, for each vector $\mathbf{w}$ in the range of a one-to-one linear transformation $T$, there is exactly one vector $\mathbf{x}$ such that $T(\mathbf{x}) = \mathbf{w}$.


Theorem (Equivalent Statements)

If $A$ is an $n \times n$ matrix and $T_A: R^n \to R^n$ is multiplication by $A$, then the following statements are equivalent.

$A$ is invertible

The range of $T_A$ is $R^n$

$T_A$ is one-to-one


Examples

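The rotation operator $T: R^2 \to R^2$ is one-to-one.

The standard matrix for $T$ is

$$[T] = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$$

$[T]$ is invertible since

$$\det \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} = \cos^2\theta + \sin^2\theta = 1 \neq 0$$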


Examples

The projection operator $T: R^3 \to R^3$ is not one-to-one.

The standard matrix for $T$ is

$$[T] = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix}$$

$[T]$ is not invertible since $\det[T] = 0$.


Inverse of a One-to-One Linear

Operator

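Suppose $T_A: R^n \to R^n$ is a one-to-one linear operator.

The matrix $A$ is invertible.

$T_{A^{-1}}: R^n \to R^n$ is itself a linear operator; it is called the inverse of $T_A$.

$T_A(T_{A^{-1}}(\mathbf{x})) = AA^{-1}\mathbf{x} = I\mathbf{x} = \mathbf{x}$ and $T_{A^{-1}}(T_A(\mathbf{x})) = A^{-1}A\mathbf{x} = I\mathbf{x} = \mathbf{x}$

$T_A \circ T_{A^{-1}} = T_{AA^{-1}} = T_I$ and $T_{A^{-1}} \circ T_A = T_{A^{-1}A} = T_I$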


Inverse of a One-to-One Linear

Operator

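If $\mathbf{w}$ is the image of $\mathbf{x}$ under $T_A$, then $T_{A^{-1}}$ maps $\mathbf{w}$ back into $\mathbf{x}$, since

$$T_{A^{-1}}(\mathbf{w}) = T_{A^{-1}}(T_A(\mathbf{x})) = \mathbf{x}$$

When a one-to-one linear operator on $R^n$ is written as $T: R^n \to R^n$, then the inverse of the operator $T$ is denoted by $T^{-1}$.

Thus, in terms of standard matrices, we have

$$[T^{-1}] = [T]^{-1}$$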


Example

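Let $T: R^2 \to R^2$ be the operator that rotates each vector in $R^2$ through the angle $\theta$:

$$[T] = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$$

Undoing the effect of $T$ means rotating each vector in $R^2$ through the angle $-\theta$.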


Example

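This is exactly what the operator $T^{-1}$ does: the standard matrix for $T^{-1}$ is

$$
[T^{-1}] = [T]^{-1}
= \begin{bmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{bmatrix}
= \begin{bmatrix} \cos(-\theta) & -\sin(-\theta) \\ \sin(-\theta) & \cos(-\theta) \end{bmatrix}
$$

The only difference is that the angle $\theta$ is replaced by $-\theta$.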

Example

Show that the linear operator $T: R^2 \to R^2$ defined by the equations

$$
\begin{aligned}
w_1 &= 2x_1 + x_2 \\
w_2 &= 3x_1 + 4x_2
\end{aligned}
$$

is one-to-one, and find $T^{-1}(w_1, w_2)$.


Example

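Solution:

The matrix form of these equations is

$$
\begin{bmatrix} w_1 \\ w_2 \end{bmatrix}
= \begin{bmatrix} 2 & 1 \\ 3 & 4 \end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \end{bmatrix}
$$

so the standard matrix for $T$ is

$$[T] = \begin{bmatrix} 2 & 1 \\ 3 & 4 \end{bmatrix}$$

This matrix is invertible (its determinant is $(2)(4) - (1)(3) = 5 \neq 0$), so $T$ is one-to-one. The standard matrix for $T^{-1}$ is

$$
[T^{-1}] = [T]^{-1} = \begin{bmatrix} \tfrac{4}{5} & -\tfrac{1}{5} \\ -\tfrac{3}{5} & \tfrac{2}{5} \end{bmatrix}
$$

Thus

$$
[T^{-1}] \begin{bmatrix} w_1 \\ w_2 \end{bmatrix}
= \begin{bmatrix} \tfrac{4}{5} & -\tfrac{1}{5} \\ -\tfrac{3}{5} & \tfrac{2}{5} \end{bmatrix}
\begin{bmatrix} w_1 \\ w_2 \end{bmatrix}
= \begin{bmatrix} \tfrac{4}{5}w_1 - \tfrac{1}{5}w_2 \\ -\tfrac{3}{5}w_1 + \tfrac{2}{5}w_2 \end{bmatrix}
$$

from which we conclude that

$$T^{-1}(w_1, w_2) = \left(\tfrac{4}{5}w_1 - \tfrac{1}{5}w_2,\; -\tfrac{3}{5}w_1 + \tfrac{2}{5}w_2\right)$$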
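The inverse found in this example can be verified with exact arithmetic. The sketch below uses Python's standard `fractions` module; the helper names and the test point `x` are illustrative assumptions.

```python
from fractions import Fraction as F


def matvec(M, x):
    """Multiply the matrix M (list of rows) by the vector x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in M]


def matmul(P, Q):
    """Product PQ of two matrices given as lists of rows."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))]
            for i in range(len(P))]


T = [[2, 1],
     [3, 4]]                        # standard matrix [T]
T_inv = [[F(4, 5), F(-1, 5)],
         [F(-3, 5), F(2, 5)]]       # claimed standard matrix [T^-1]

identity = [[1, 0], [0, 1]]
print(matmul(T_inv, T) == identity)   # True
print(matmul(T, T_inv) == identity)   # True

x = [1, 2]
w = matvec(T, x)                    # w = T(x) = [4, 11]
print(matvec(T_inv, w) == x)        # True: T^-1 maps w back to x
```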


Linearity Properties

Theorem 28 (Properties of Linear

Transformations)

A transformation $T: R^n \to R^m$ is linear if and only if the following relationships hold for all vectors $\mathbf{u}$ and $\mathbf{v}$ in $R^n$ and every scalar $c$.

T(u + v) = T(u) + T(v)

T(cu) = cT(u)


Linearity Properties

Theorem 29

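If $T: R^n \to R^m$ is a linear transformation, and $\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_n$ are the standard basis vectors for $R^n$, then the standard matrix for $T$ is

$$A = [T] = [\,T(\mathbf{e}_1) \mid T(\mathbf{e}_2) \mid \cdots \mid T(\mathbf{e}_n)\,]$$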
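Theorem 29 gives a recipe: apply $T$ to each standard basis vector and use the results as columns. The sketch below follows that recipe for a hypothetical linear map `T` from $R^3$ to $R^2$; the map, the function `standard_matrix`, and the test vector are assumptions made for illustration.

```python
def T(x):
    """A hypothetical linear map R^3 -> R^2, used only as an example."""
    x1, x2, x3 = x
    return [x1 + 2 * x2, 3 * x2 - x3]


def standard_matrix(transform, n):
    """Build [T] whose j-th column is T(e_j), for a linear map on R^n."""
    columns = []
    for j in range(n):
        e_j = [1 if i == j else 0 for i in range(n)]   # standard basis vector
        columns.append(transform(e_j))
    # The images are columns, so transpose them into a list of rows.
    return [list(row) for row in zip(*columns)]


def matvec(M, x):
    """Multiply the matrix M (list of rows) by the vector x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in M]


A = standard_matrix(T, 3)
print(A)                       # [[1, 2, 0], [0, 3, -1]]

x = [1, 1, 1]
print(T(x), matvec(A, x))      # both give [3, 2]
```

The recipe recovers the right matrix only because `T` is linear; for a nonlinear map the resulting matrix would not reproduce the map.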


Example (Standard Matrix for a

Projection Operator)

Let $l$ be the line in the xy-plane that passes through the origin and makes an angle $\theta$ with the positive x-axis, where $0 \le \theta \le \pi$. Let $T: R^2 \to R^2$ be a linear operator that maps each vector into its orthogonal projection on $l$.

(a) Find the standard matrix for $T$.

(b) Find the orthogonal projection of the vector $\mathbf{x} = (1, 5)$ onto the line through the origin that makes an angle of $\theta = \pi/6$ with the positive x-axis.


Example

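The standard matrix for $T$ can be written as

$$[T] = [\,T(\mathbf{e}_1) \mid T(\mathbf{e}_2)\,]$$

Consider the case $0 \le \theta \le \pi/2$.

The norm of $T(\mathbf{e}_1)$ is $\|T(\mathbf{e}_1)\| = \cos\theta$, so

$$
T(\mathbf{e}_1) = \begin{bmatrix} \|T(\mathbf{e}_1)\| \cos\theta \\ \|T(\mathbf{e}_1)\| \sin\theta \end{bmatrix}
= \begin{bmatrix} \cos^2\theta \\ \sin\theta\cos\theta \end{bmatrix}
$$

Similarly, $\|T(\mathbf{e}_2)\| = \sin\theta$, so

$$
T(\mathbf{e}_2) = \begin{bmatrix} \|T(\mathbf{e}_2)\| \cos\theta \\ \|T(\mathbf{e}_2)\| \sin\theta \end{bmatrix}
= \begin{bmatrix} \sin\theta\cos\theta \\ \sin^2\theta \end{bmatrix}
$$

Therefore

$$
[T] = \begin{bmatrix} \cos^2\theta & \sin\theta\cos\theta \\ \sin\theta\cos\theta & \sin^2\theta \end{bmatrix}
$$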

Example

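Since $\sin(\pi/6) = 1/2$ and $\cos(\pi/6) = \sqrt{3}/2$, it follows from part (a) that the standard matrix for this projection operator is

$$
[T] = \begin{bmatrix} 3/4 & \sqrt{3}/4 \\ \sqrt{3}/4 & 1/4 \end{bmatrix}
$$

Thus,

$$
T\left(\begin{bmatrix} 1 \\ 5 \end{bmatrix}\right)
= \begin{bmatrix} 3/4 & \sqrt{3}/4 \\ \sqrt{3}/4 & 1/4 \end{bmatrix}
\begin{bmatrix} 1 \\ 5 \end{bmatrix}
= \begin{bmatrix} \dfrac{3 + 5\sqrt{3}}{4} \\[2mm] \dfrac{\sqrt{3} + 5}{4} \end{bmatrix}
$$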
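The projection example can be reproduced numerically from the matrix with entries $\cos^2\theta$, $\sin\theta\cos\theta$, and $\sin^2\theta$. The following is a floating-point sketch; the function names `projection_matrix` and `matvec` are illustrative assumptions.

```python
import math


def projection_matrix(theta):
    """Standard matrix of the orthogonal projection onto the line through
    the origin that makes angle theta with the positive x-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * c, s * c],
            [s * c, s * s]]


def matvec(M, x):
    """Multiply the matrix M (list of rows) by the vector x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in M]


P = projection_matrix(math.pi / 6)
projected = matvec(P, [1, 5])

expected = [(3 + 5 * math.sqrt(3)) / 4, (math.sqrt(3) + 5) / 4]
print(projected)   # floating-point values of the two components
print(expected)    # the closed-form values from the example
```

Projecting the result a second time leaves it unchanged (up to rounding), which is the defining behaviour of a projection.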


Theorem (Equivalent Statements)

If $A$ is an $n \times n$ matrix, and if $T_A: R^n \to R^n$ is multiplication by $A$, then the following are equivalent.

A is invertible

Ax = 0 has only the trivial solution

The reduced row-echelon form of A is In

A is expressible as a product of elementary

matrices


Theorem (Equivalent Statements)

$A\mathbf{x} = \mathbf{b}$ is consistent for every $n \times 1$ matrix $\mathbf{b}$

$A\mathbf{x} = \mathbf{b}$ has exactly one solution for every $n \times 1$ matrix $\mathbf{b}$

$\det(A) \neq 0$

The range of $T_A$ is $R^n$

$T_A$ is one-to-one


Example (Multiple Linear

Regression)(1/3)

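Given $n$ vectors $\mathbf{u}_1, \mathbf{u}_2, \ldots, \mathbf{u}_n$ sampled from a population, we want to fit the multiple regression model

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_m x_m$$

that is,

$$\mathbf{u}_i = (X_i, Y_i), \quad i = 1, 2, \ldots, n$$

where

$$X_i = \begin{bmatrix} 1 & x_{i1} & x_{i2} & \cdots & x_{im} \end{bmatrix}, \qquad Y_i = y_i$$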


Example (Multiple Linear

Regression)(2/3)

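We can then name the following matrices:

$$
X = \begin{bmatrix} X_1 \\ X_2 \\ \vdots \\ X_n \end{bmatrix}
= \begin{bmatrix}
1 & x_{11} & \cdots & x_{1m} \\
1 & x_{21} & \cdots & x_{2m} \\
\vdots & \vdots & & \vdots \\
1 & x_{n1} & \cdots & x_{nm}
\end{bmatrix},
\qquad
Y = \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix}
$$

and the $i$th residual

$$r_i = y_i - \sum_{j=0}^{m} x_{ij}\hat{\beta}_j$$

where $x_{i0} = 1$ is the entry of the leading column of ones, so that $\hat{\beta}_0$ is the estimated intercept.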


Example (Multiple Linear

Regression)(3/3)

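The best fit is obtained when the sum of squared residuals is minimized. From the theory of linear least squares, the parameter estimators are found by solving the normal equations:

$$\sum_{i=1}^{n} \sum_{k=0}^{m} x_{ij} x_{ik} \hat{\beta}_k = \sum_{i=1}^{n} x_{ij} y_i, \qquad j = 0, 1, \ldots, m$$

where $x_{i0} = 1$ for the column of ones in $X$. That is,

$$X^T X \hat{\boldsymbol{\beta}} = X^T Y$$

$$\hat{\boldsymbol{\beta}} = (X^T X)^{-1} X^T Y$$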
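To make the normal equations concrete, the sketch below builds $X^TX$ and $X^TY$ for a tiny made-up data set and solves the resulting system by Gauss-Jordan elimination with exact fractions. The data are fabricated for illustration (they lie exactly on $y = 1 + 2x_1 + 3x_2$), and the helper names are assumptions. It solves the system directly rather than forming $(X^TX)^{-1}$, which gives the same estimate when $X^TX$ is invertible.

```python
from fractions import Fraction


def transpose(M):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul(P, Q):
    """Product PQ of two matrices given as lists of rows."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))]
            for i in range(len(P))]


def solve(M, rhs):
    """Solve M z = rhs by Gauss-Jordan elimination.
    Assumes M is square and invertible (otherwise next() fails)."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]            # scale pivot row to 1
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]


def least_squares(X, y):
    """Solve the normal equations (X^T X) beta = X^T y."""
    Xt = transpose(X)
    XtX = matmul(Xt, X)
    Xty = [sum(a * b for a, b in zip(row, y)) for row in Xt]
    return solve(XtX, Xty)


# Made-up observations (x1, x2); each row of X starts with 1 for the intercept.
points = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1)]
X = [[1, x1, x2] for x1, x2 in points]
y = [1 + 2 * x1 + 3 * x2 for x1, x2 in points]

beta = least_squares(X, y)
print(beta)   # the three estimates equal 1, 2 and 3 (as Fractions)
```

Because the sample responses were generated without noise, the estimates reproduce the generating coefficients exactly; with noisy data they would only approximate them.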


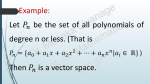

General Vector Spaces

Definition (Vector Space)

Let V be an arbitrary nonempty set of

objects on which two operations are defined:

Addition

Multiplication by scalars

If the following axioms are satisfied by all

objects u, v, w in V and all scalars k and l,

then we call V a vector space and we call

the objects in V vectors.


Definition (Vector Space)

1. If u and v are objects in V, then u + v is in V.

2. u + v = v + u

3. u + (v + w) = (u + v) + w

4. There is an object 0 in V, called a zero vector for V,

such that 0 + u = u + 0 = u for all u in V.

5. For each u in V, there is an object -u in V, called a

negative of u, such that u + (-u) = (-u) + u = 0.

6. If k is any scalar and u is any object in V, then ku is

in V.


Definition (Vector Space)

7. k (u + v) = ku + kv

8. (k + l) u = ku + lu

9. k (lu) = (kl) (u)

10. 1u = u


Remarks

Depending on the application, scalars may be

real numbers or complex numbers.

Vector spaces in which the scalars are complex

numbers are called complex vector spaces, and those

in which the scalars must be real are called real

vector spaces.

116

Remarks

The definition of a vector space specifies neither

the nature of the vectors nor the operations.

Any kind of object can be a vector, and the

operations of addition and scalar multiplication may

not have any relationship or similarity to the

standard vector operations on Rn.

The only requirement is that the ten vector space

axioms be satisfied.

117

Example (Rn Is a Vector Space)

The set V = Rn with the standard operations of

addition and scalar multiplication is a vector

space.

Axioms 1 and 6 follow from the definitions of

the standard operations on Rn; the remaining

axioms follow from other Theorems

The three most important special cases of Rn are

R (the real numbers), R2 (the vectors in the

plane), and R3 (the vectors in 3-space).

118

Example (2×2 Matrices)

Show that the set V of all 2×2 matrices with real

entries is a vector space if vector addition is

defined to be matrix addition and vector scalar

multiplication is defined to be matrix scalar

multiplication.

119

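Example (2×2 Matrices)

Let

$$u = \begin{bmatrix} u_{11} & u_{12} \\ u_{21} & u_{22} \end{bmatrix} \quad \text{and} \quad v = \begin{bmatrix} v_{11} & v_{12} \\ v_{21} & v_{22} \end{bmatrix}$$

To prove Axiom 1, we must show that u + v is an object in V; that is, we must show that u + v is a 2×2 matrix.

$$u + v = \begin{bmatrix} u_{11} & u_{12} \\ u_{21} & u_{22} \end{bmatrix} + \begin{bmatrix} v_{11} & v_{12} \\ v_{21} & v_{22} \end{bmatrix} = \begin{bmatrix} u_{11} + v_{11} & u_{12} + v_{12} \\ u_{21} + v_{21} & u_{22} + v_{22} \end{bmatrix}$$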

120

Example

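Similarly, Axiom 6 holds because for any real number k we have

$$ku = k \begin{bmatrix} u_{11} & u_{12} \\ u_{21} & u_{22} \end{bmatrix} = \begin{bmatrix} ku_{11} & ku_{12} \\ ku_{21} & ku_{22} \end{bmatrix}$$

so that ku is a 2×2 matrix and consequently is an object in V.

Axiom 2 follows from Theorem 1.4.1a since

$$u + v = \begin{bmatrix} u_{11} & u_{12} \\ u_{21} & u_{22} \end{bmatrix} + \begin{bmatrix} v_{11} & v_{12} \\ v_{21} & v_{22} \end{bmatrix} = \begin{bmatrix} v_{11} & v_{12} \\ v_{21} & v_{22} \end{bmatrix} + \begin{bmatrix} u_{11} & u_{12} \\ u_{21} & u_{22} \end{bmatrix} = v + u$$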

121

Example

Similarly, Axiom 3 follows from part (b) of that

theorem; and Axioms 7, 8, and 9 follow from parts

(h), (j), and (l), respectively.

122

Example

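To prove Axiom 4, let

$$0 = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}$$

Then

$$0 + u = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix} + \begin{bmatrix} u_{11} & u_{12} \\ u_{21} & u_{22} \end{bmatrix} = \begin{bmatrix} u_{11} & u_{12} \\ u_{21} & u_{22} \end{bmatrix} = u$$

Similarly, u + 0 = u.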

123

Example

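To prove Axiom 5, let

$$-u = \begin{bmatrix} -u_{11} & -u_{12} \\ -u_{21} & -u_{22} \end{bmatrix}$$

Then

$$u + (-u) = \begin{bmatrix} u_{11} & u_{12} \\ u_{21} & u_{22} \end{bmatrix} + \begin{bmatrix} -u_{11} & -u_{12} \\ -u_{21} & -u_{22} \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix} = 0$$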

Similarly, (-u) + u = 0.

For Axiom 10, 1u = u.

124

Example (Vector Space of m×n Matrices)

The previous example is a special case of a

more general class of vector spaces.

The arguments in that example can be

adapted to show that the set V of all m×n

matrices with real entries, together with the

operations matrix addition and scalar

multiplication, is a vector space.

125

Example (Vector Space of m×n Matrices)

The m×n zero matrix is the zero vector 0,

and if u is the m×n matrix U, then the matrix -U

is the negative -u of the vector u.

We shall denote this vector space by the

symbol Mmn

126

Example (Not a Vector Space)

Let V = R2 and define addition and scalar

multiplication operations as follows: If u = (u1,

u2) and v = (v1, v2), then define

u + v = (u1 + v1, u2 + v2)

and if k is any real number, then define

k u = (k u1, 0)

130

Example (Not a Vector Space)

There are values of u for which Axiom 10 fails

to hold. For example, if u = (u1, u2) is such that

u2 ≠ 0, then

1u = 1 (u1, u2) = (1 u1, 0) = (u1, 0) ≠ u

Thus, V is not a vector space with the stated

operations.
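The failure of Axiom 10 can be observed directly. The sketch below is illustrative only: the function names `add` and `scale` are assumptions that encode the two operations defined in this example, and the test vector is arbitrary.

```python
def add(u, v):
    # Standard componentwise addition on R2.
    return (u[0] + v[0], u[1] + v[1])

def scale(k, u):
    # The nonstandard scalar multiplication of this example: k u = (k u1, 0).
    return (k * u[0], 0)

u = (3, 4)                      # any vector with u2 != 0 will do
print(scale(1, u))              # second component is lost
print(scale(1, u) == u)         # Axiom 10 (1u = u) fails for this u

w = (3, 0)                      # when u2 = 0 the axiom happens to hold
print(scale(1, w) == w)
```

A single counterexample is enough: an axiom must hold for all vectors, so one vector with u2 ≠ 0 shows that V is not a vector space under these operations.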

131

Every Plane Through the Origin Is a

Vector Space

Check all the axioms!

Let V be any plane through the origin in R3. Since R3

itself is a vector space, Axioms 2, 3, 7, 8, 9, and 10 hold

for all points in R3 and consequently for all points in the

plane V.

We need only show that Axioms 1, 4, 5, and 6 are

satisfied.

132

Every Plane Through the Origin Is a

Vector Space

Check all the axioms!

Since the plane V passes through the origin, it has an

equation of the form ax + by + cz = 0. If u = (u1, u2, u3)

and v = (v1, v2, v3) are points in V, then au1 + bu2 + cu3 =

0 and av1 + bv2 + cv3 = 0. Adding these equations gives

a(u1 + v1) +b(u2 + v2) +c (u3 + v3) = 0.

Axiom 1: u + v = (u1 + v1, u2 + v2, u3 + v3); thus u + v

lies in the plane V.

Axiom 5: Multiplying au1 + bu2 + cu3 = 0 through by -1

gives a(-u1) + b(-u2) + c(-u3) = 0 ; thus, -u = (-u1, -u2, -u3)

lies in V.

133

The Zero Vector Space

Let V consist of a single object, which we

denote by 0, and define 0 + 0 = 0 and k 0 =

0 for all scalars k.

We call this the zero vector space.

134

Theorem

Let V be a vector space, u be a vector in V,

and k a scalar; then:

0 u = 0

k 0 = 0

(-1) u = -u

If k u = 0 , then k = 0 or u = 0.

135

Subspaces

Definition

A subset W of a vector space V is called a subspace

of V if W is itself a vector space under the addition

and scalar multiplication defined on V.

Theorem 32

If W is a set of one or more vectors from a vector

space V, then W is a subspace of V if and only if the

following conditions hold:

a) If u and v are vectors in W, then u + v is in W.

b) If k is any scalar and u is any vector in W, then ku is in W.

136

Subspaces

Remark

Theorem 32 states that W is a subspace of V if and

only if W is closed under addition (condition (a))

and closed under scalar multiplication (condition (b)).

137

Example

Let W be any plane through

the origin and let u and v be

any vectors in W.

u + v must lie in W since it is

the diagonal of the

parallelogram determined by

u and v, and k u must lie in

W for any scalar k since k u

lies on a line through u.

138

Example

Thus, W is closed under

addition and scalar

multiplication, so it is a

subspace of R3.

139

Example

A line through the origin of R3 is a subspace

of R3.

Let W be a line through the origin of R3.

140

Example (Not a Subspace)

Let W be the set of all

points (x, y) in R2

such that x ≥ 0 and y ≥ 0.

These are the

points in the first

quadrant.

141

Example (Not a Subspace)

The set W is not a

subspace of R2 since

it is not closed under

scalar multiplication.

For example, v = (1, 1)

lies in W, but its

negative (-1)v = -v =

(-1, -1) does not.

142

Remarks

Think about “set” and “empty set”!

Every nonzero vector space V has at least two

subspaces: V itself is a subspace, and the set {0}

consisting of just the zero vector in V is a

subspace called the zero subspace.

143

Remarks

Examples of subspaces of R2 and R3:

Subspaces of R2:

{0}

Lines through the origin

R2

Subspaces of R3:

{0}

Lines through the origin

Planes through origin

R3

They are actually the only subspaces of R2 and

R3

144

Solution Space

Solution Space of Homogeneous Systems

If Ax = b is a system of the linear equations, then

each vector x that satisfies this equation is called a

solution vector of the system.

Theorem 33 shows that the solution vectors of a

homogeneous linear system form a vector space,

which we shall call the solution space of the system.

148

Solution Space

Theorem 33

If Ax = 0 is a homogeneous linear system of m

equations in n unknowns, then the set of

solution vectors is a subspace of Rn.

149

Example

Find the solution spaces of the linear systems.

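$$\text{(a)} \quad \begin{bmatrix} 1 & -2 & 3 \\ 2 & -4 & 6 \\ 3 & -6 & 9 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \qquad \text{(b)} \quad \begin{bmatrix} 1 & -2 & 3 \\ -3 & 7 & -8 \\ -2 & 4 & -6 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$$

$$\text{(c)} \quad \begin{bmatrix} 1 & -2 & 3 \\ -3 & 7 & -8 \\ 4 & 1 & 2 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \qquad \text{(d)} \quad \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$$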

Each of these systems has three unknowns, so the

solutions form subspaces of R3.

Geometrically, each solution space must be a line

through the origin, a plane through the origin, the

origin only, or all of R3.

150

Example

Solution.

(a) x = 2s - 3t, y = s, z = t

x = 2y - 3z or x – 2y + 3z = 0

This is the equation of the plane through the origin with

n = (1, -2, 3) as a normal vector.

(b) x = -5t , y = -t, z =t

which are parametric equations for the line through the origin

parallel to the vector v = (-5, -1, 1).

(c) The solution is x = 0, y = 0, z = 0, so the solution space is the

origin only, that is {0}.

(d) The solutions are x = r, y = s, z = t, where r, s, and t have arbitrary values, so the solution space is all of R3.

151
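The parametric solutions in (a) and (b) can be checked by substituting them back into the systems. This is a small sketch under one assumption: the signs of the coefficient matrices, which are hard to read in the slide text, are taken to be those that agree with the stated solutions. The names `matvec`, `solution_a`, and `solution_b` are illustrative.

```python
def matvec(A, x):
    """Return the product A x for a matrix given as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Coefficient matrices of systems (a) and (b); the signs are an assumption,
# chosen to agree with the solutions stated in the example.
A_a = [[1, -2, 3], [2, -4, 6], [3, -6, 9]]
A_b = [[1, -2, 3], [-3, 7, -8], [-2, 4, -6]]

def solution_a(s, t):
    return [2 * s - 3 * t, s, t]     # points on the plane x - 2y + 3z = 0

def solution_b(t):
    return [-5 * t, -t, t]           # points on the line parallel to (-5, -1, 1)

params = range(-3, 4)
ok_a = all(matvec(A_a, solution_a(s, t)) == [0, 0, 0] for s in params for t in params)
ok_b = all(matvec(A_b, solution_b(t)) == [0, 0, 0] for t in params)
print(ok_a, ok_b)
```

Checking a grid of parameter values is not a proof, but it is a quick way to catch a sign error in either the matrix or the parametrization.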

Linear Combination

Definition

A vector w is a linear combination of the vectors v1,

v2,…, vr if it can be expressed in the form w = k1v1

+ k2v2 + · · · + kr vr where k1, k2, …, kr are scalars.

152

Linear Combination

Vectors in R3 are linear combinations of i, j, and k

Every vector v = (a, b, c) in R3 is expressible as a

linear combination of the standard basis vectors

i = (1, 0, 0), j = (0, 1, 0), k = (0, 0, 1)

since

v = a(1, 0, 0) + b(0, 1, 0) + c(0, 0, 1) = a i + b j + c k

153

Linear Combination and

Spanning

Theorem 34

If v1, v2, …, vr are vectors in a vector space V, then:

The set W of all linear combinations of v1,

v2, …, vr is a subspace of V.

W is the smallest subspace of V that contains v1,

v2, …, vr in the sense that every other subspace

of V that contains v1, v2, …, vr must contain W.

158

Linear Combination and

Spanning

Definition

If S = {v1, v2, …, vr} is a set of vectors in a vector

space V, then the subspace W of V consisting of all

linear combinations of the vectors in S is called

the space spanned by v1, v2, …, vr, and we say that

the vectors v1, v2, …, vr span W.

To indicate that W is the space spanned by the

vectors in the set S = {v1, v2, …, vr}, we write W =

span(S) or W = span{v1, v2, …, vr}.

159

Example

If v1 and v2 are non-collinear vectors in R3 with their

initial points at the origin, then span{v1, v2}, which

consists of all linear combinations k1v1 + k2v2 is the

plane determined by v1 and v2.

160

Example

Similarly, if v is a nonzero vector in R2 or R3, then

span{v}, which is the set of all scalar multiples kv, is

the line determined by v.

161

Example

Determine whether v1 = (1, 1, 2), v2 = (1, 0, 1),

and v3 = (2, 1, 3) span the vector space R3.

162

Example

Solution

Is it possible that an arbitrary vector b = (b1, b2, b3) in R3

can be expressed as a linear combination b = k1v1 + k2v2

+ k3v3 ?

b = (b1, b2, b3) = k1(1, 1, 2) + k2(1, 0, 1) + k3(2, 1, 3) =

(k1 + k2 + 2k3, k1 + k3, 2k1 + k2 + 3k3) or

k1 + k2 + 2k3 = b1

k1 + k3 = b2

2k1 + k2 + 3k3 = b3

163

Example

Solution

This system is consistent for all values of b1, b2, and b3 if

and only if the coefficient matrix

$$A = \begin{bmatrix} 1 & 1 & 2 \\ 1 & 0 & 1 \\ 2 & 1 & 3 \end{bmatrix}$$

has a nonzero determinant.

However, det(A) = 0, so that v1, v2, and v3, do not span R3.
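The determinant claim is easy to confirm by cofactor expansion. The sketch below assumes the columns of A are v1 = (1, 1, 2), v2 = (1, 0, 1), and v3 = (2, 1, 3); the helper `det3` is an illustrative name, not something from the slides.

```python
def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

v1, v2, v3 = (1, 1, 2), (1, 0, 1), (2, 1, 3)
A = [list(row) for row in zip(v1, v2, v3)]   # columns of A are v1, v2, v3

print(det3(A))

# The reason the determinant vanishes: v3 is the sum of v1 and v2.
print(tuple(a + b for a, b in zip(v1, v2)) == v3)
```

Since v3 = v1 + v2, the three vectors lie in a common plane through the origin, which is why they cannot span all of R3.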

164

Theorem

If S = {v1, v2, …, vr} and S′ = {w1, w2, …,

wr} are two sets of vectors in a vector space

V, then

span{v1, v2, …, vr} = span{w1, w2, …, wr}

if and only if each vector in S is a linear

combination of those in S′ and each vector

in S′ is a linear combination of those in S.

165

Linearly Dependent &

Independent

Definition

If S = {v1, v2, …, vr} is a nonempty set of

vectors, then the vector equation k1v1 + k2v2 + …

+ krvr = 0 has at least one solution, namely k1 =

0, k2 = 0, … , kr = 0.

If this is the only solution, then S is called a

linearly independent set. If there are other

solutions, then S is called a linearly dependent

set.

166

Linearly Dependent &

Independent

Examples

If v1 = (2, -1, 0, 3), v2 = (1, 2, 5, -1), and v3 = (7,

-1, 5, 8).

Then the set of vectors S = {v1, v2, v3} is

linearly dependent, since 3v1 + v2 – v3 = 0.

167

Example

Let i = (1, 0, 0), j = (0, 1, 0), and k = (0, 0, 1) in R3.

Consider the equation k1i + k2j + k3k = 0

k1(1, 0, 0) + k2(0, 1, 0) + k3(0, 0, 1) = (0, 0, 0)

(k1, k2, k3) = (0, 0, 0)

The set S = {i, j, k} is linearly independent.

Similarly the vectors

e1 = (1, 0, 0, …,0), e2 = (0, 1, 0, …, 0),

…, en = (0, 0, 0, …, 1)

form a linearly independent set in Rn.

168

Example

Remark:

To check whether a set of vectors is linearly

independent, set a linear combination of the

vectors equal to the zero vector and check whether

the only solution has all coefficients equal to zero.

169

Example

Determine whether the vectors

v1 = (1, -2, 3), v2 = (5, 6, -1), v3 = (3, 2, 1)

form a linearly dependent set or a linearly

independent set.

170

Example

Solution

Let the vector equation k1v1 + k2v2 + k3v3 = 0

k1(1, -2, 3) + k2(5, 6, -1) + k3(3, 2, 1) = (0, 0, 0)

k1 + 5k2 + 3k3 = 0

-2k1 + 6k2 + 2k3 = 0

3k1 – k2 + k3 = 0

det(A) = 0

The system has nontrivial solutions

v1,v2, and v3 form a linearly dependent set
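Since det(A) = 0 only says that a nontrivial solution exists, it can help to exhibit one. The sketch below checks one particular dependency, -v1 - v2 + 2v3 = 0; the coefficients were found by solving the system by hand and are not stated in the slides, and `combo` is an illustrative helper name.

```python
def combo(coeffs, vectors):
    """Return the linear combination sum(k_i * v_i) as a tuple."""
    size = len(vectors[0])
    return tuple(sum(k * v[i] for k, v in zip(coeffs, vectors)) for i in range(size))

v1, v2, v3 = (1, -2, 3), (5, 6, -1), (3, 2, 1)

# One nontrivial solution of k1 v1 + k2 v2 + k3 v3 = 0 (every scalar multiple also works).
k = (-1, -1, 2)
print(combo(k, (v1, v2, v3)))
```

Equivalently, v3 = (v1 + v2)/2, so v3 is a linear combination of the other two vectors.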

171

Theorems

Theorem 36

A set S with two or more vectors is:

Linearly dependent if and only if at least one of the

vectors in S is expressible as a linear combination of

the other vectors in S.

Linearly independent if and only if no vector in S is

expressible as a linear combination of the other

vectors in S.

172

Theorems

Theorem 37

A finite set of vectors that contains the zero

vector is linearly dependent.

A set with exactly two vectors is linearly

independent if and only if neither vector is a

scalar multiple of the other.

173

Theorems

Theorem 38

Let S = {v1, v2, …, vr} be a set of vectors in Rn.

If r > n, then S is linearly dependent.

174

Geometric Interpretation of Linear

Independence

In R2 and R3, a set of two vectors is linearly

independent if and only if the vectors do not lie on the

same line when they are placed with their initial points

at the origin.

In R3, a set of three vectors is linearly independent if

and only if the vectors do not lie in the same plane

when they are placed with their initial points at the

origin.

178

Basis

Definition

If V is any vector space and S = {v1, v2, …,vn}

is a set of vectors in V, then S is called a basis

for V if the following two conditions hold:

S is linearly independent.

S spans V.

182

Basis

Theorem 39 (Uniqueness of Basis

Representation)

If S = {v1, v2, …,vn} is a basis for a vector

space V, then every vector v in V can be

expressed in the form

v = c1v1 + c2v2 + … + cnvn

in exactly one way.

183

Coordinates Relative to a Basis

If S = {v1, v2, …, vn} is a basis for a vector space V,

and

v = c1v1 + c2v2 + ··· + cnvn

is the expression for a vector v in terms of the basis S,

then the scalars c1, c2, …, cn, are called the coordinates

of v relative to the basis S.

The vector (c1, c2, …, cn) in Rn constructed from these

coordinates is called the coordinate vector of v relative

to S; it is denoted by

(v)S = (c1, c2, …, cn)

184

Coordinates Relative to a Basis

Remark:

Coordinate vectors depend not only on the basis S but

also on the order in which the basis vectors are

written.

A change in the order of the basis vectors results in a

corresponding change of order for the entries in the

coordinate vector.

185

Example (Standard Basis for R3)

Suppose that i = (1, 0, 0), j = (0, 1, 0), and k = (0,

0, 1), then S = {i, j, k} is a linearly independent

set in R3.

This set also spans R3 since any vector v = (a, b, c)

in R3 can be written as

v = (a, b, c) = a(1, 0, 0) + b(0, 1, 0) + c(0, 0, 1) = ai

+ bj + ck

186

Example (Standard Basis for R3)

Thus, S is a basis for R3; it is called the standard

basis for R3.

Looking at the coefficients of i, j, and k, it

follows that the coordinates of v relative to

the standard basis are a, b, and c, so

(v)S = (a, b, c)

Comparing this result to v = (a, b, c), we have

v = (v)S

187

Standard Basis for Rn

If e1 = (1, 0, 0, …, 0), e2 = (0, 1, 0, …, 0), …, en

= (0, 0, 0, …, 1), then

S = {e1, e2, …, en}

is a linearly independent set in Rn.

This set also spans Rn since any vector v = (v1,

v2, …, vn) in Rn can be written as

v = v1e1 + v2e2 + … + vnen

Thus, S is a basis for Rn; it is called the standard

basis for Rn.

188

Standard Basis for Rn

The coordinates of v = (v1, v2, …, vn) relative to

the standard basis are v1, v2, …, vn, thus

(v)S = (v1, v2, …, vn)

As in the previous example, we have v = (v)S, so a

vector v and its coordinate vector relative to the

standard basis for Rn are the same.

189

Example

Let v1 = (1, 2, 1), v2 = (2, 9, 0), and v3 = (3, 3, 4).

Show that the set S = {v1, v2, v3} is a basis for R3.

190

Example

Solution:

To show that the set S spans R3, we must show that an arbitrary

vector

b = (b1, b2, b3)

can be expressed as a linear combination

b = c1v1 + c2v2 + c3v3

of the vectors in S.

Let (b1, b2, b3) = c1(1, 2, 1) + c2(2, 9, 0) + c3(3, 3, 4)

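c1 + 2c2 + 3c3 = b1

2c1 + 9c2 + 3c3 = b2

c1 + 4c3 = b3

The coefficient matrix A of this system has det(A) = -1 ≠ 0, so S spans R3 and is linearly independent.

S is a basis for R3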

191

Example (Representing a Vector

Using Two Bases)

Let S = {v1, v2, v3} be the basis for R3 in the

preceding example.

Find the coordinate vector of v = (5, -1, 9) with

respect to S.

Find the vector v in R3 whose coordinate vector with

respect to the basis S is (v)s = (-1, 3, 2).

192

Example (Representing a Vector

Using Two Bases)

Solution (a)

We must find scalars c1, c2, c3 such that v = c1v1 +

c2v2 + c3v3, or, in terms of components, (5, -1, 9) =

c1(1, 2, 1) + c2(2, 9, 0) + c3(3, 3, 4)

Solving this, we obtain c1 = 1, c2 = -1, c3 = 2.

Therefore, (v)s = (1, -1, 2).

Solution (b)

Using the definition of the coordinate vector (v)s, we

obtain

v = (-1)v1 + 3v2 + 2v3 = (11, 31, 7).
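Both parts can be reproduced numerically. The sketch below is one possible way to do it, using exact fractions and a small Gauss-Jordan routine; `solve` is an illustrative helper, and the matrix A is assumed to have v1, v2, v3 as its columns.

```python
from fractions import Fraction

def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination (A assumed invertible)."""
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [row[n] for row in M]

v1, v2, v3 = (1, 2, 1), (2, 9, 0), (3, 3, 4)
A = [list(row) for row in zip(v1, v2, v3)]    # columns of A are the basis vectors

# Part (a): coordinates of v = (5, -1, 9) relative to S.
coords = solve(A, [5, -1, 9])
print(coords)

# Part (b): the vector whose coordinate vector relative to S is (-1, 3, 2).
c = (-1, 3, 2)
v = [c[0] * a + c[1] * b + c[2] * d for a, b, d in zip(v1, v2, v3)]
print(v)
```

Part (a) requires solving a linear system, while part (b) is only a matrix-vector product; going from coordinates to the vector is always the cheaper direction.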

193

Standard Basis for Pn

S = {1, x, x^2, …, x^n} is a basis for the

vector space Pn of polynomials of the form

a0 + a1x + … + anx^n. The set S is called the

standard basis for Pn.

Find the coordinate vector of the

polynomial p = a0 + a1x + a2x^2 relative to

the basis S = {1, x, x^2} for P2.

194

Standard Basis for Pn

Solution:

The coordinates of p = a0 + a1x + a2x^2 are the

scalar coefficients of the basis vectors 1, x,

and x^2, so

(p)S = (a0, a1, a2).

195

Standard Basis for Mmn

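Let

$$M_1 = \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix}, \quad M_2 = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}, \quad M_3 = \begin{bmatrix} 0 & 0 \\ 1 & 0 \end{bmatrix}, \quad M_4 = \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix}$$

The set S = {M1, M2, M3, M4} is a basis for the vector space M22 of 2×2 matrices.

To see that S spans M22, note that an arbitrary vector (matrix) can be written as

$$\begin{bmatrix} a & b \\ c & d \end{bmatrix} = a \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix} + b \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix} + c \begin{bmatrix} 0 & 0 \\ 1 & 0 \end{bmatrix} + d \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix} = aM_1 + bM_2 + cM_3 + dM_4$$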

196

Standard Basis for Mmn

To see that S is linearly independent, assume aM1 + bM2 + cM3 + dM4 = 0. It follows that

$$\begin{bmatrix} a & b \\ c & d \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}$$

Thus, a = b = c = d = 0, so S is linearly independent.

The basis S is called the standard basis for M22.

More generally, the standard basis for Mmn consists

of the mn different matrices with a single 1 and

zeros for the remaining entries.

197

Basis for the Subspace span(S)

If S = {v1, v2, …,vn} is a linearly

independent set in a vector space V, then S

is a basis for the subspace span(S)

since the set S spans span(S) by definition of

span(S).

198

Finite-Dimensional

Definition

A nonzero vector space V is called finite-dimensional

if it contains a finite set of vectors {v1, v2, …, vn}

that forms a basis. If no such set exists, V is

called infinite-dimensional. In addition, we

shall regard the zero vector space to be finite-dimensional.

199

Finite-Dimensional

Example

The vector spaces Rn, Pn, and Mmn are finite-dimensional.

The vector spaces F(-∞, ∞), C(-∞, ∞), Cm(-∞, ∞),

and C∞(-∞, ∞) are infinite-dimensional.

200

Theorems

Theorem 40

Let V be a finite-dimensional vector space and

{v1, v2, …,vn} any basis.

If a set has more than n vectors, then it is linearly

dependent.

If a set has fewer than n vectors, then it does not span

V.

201

Theorems

Theorem 41

All bases for a finite-dimensional vector space

have the same number of vectors.

202

Dimension

Definition

The dimension of a finite-dimensional vector

space V, denoted by dim(V), is defined to be the

number of vectors in a basis for V.

We define the zero vector space to have

dimension zero.

203

Dimension

Dimensions of Some Vector Spaces:

dim(Rn) = n [The standard basis has n vectors]

dim(Pn) = n + 1 [The standard basis has n + 1

vectors]

dim(Mmn) = mn [The standard basis has mn

vectors]

204

Example

Determine a basis for and the dimension of the

solution space of the homogeneous system

2x1 + 2x2 - x3 + x5 = 0

-x1 - x2 + 2x3 - 3x4 + x5 = 0

x1 + x2 - 2x3 - x5 = 0

x3 + x4 + x5 = 0

205

Example

Solution:

The general solution of the given system is

x1 = -s-t, x2 = s,

x3 = -t, x4 = 0, x5 = t

Therefore, the solution vectors can be written as

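$$\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5 \end{bmatrix} = \begin{bmatrix} -s - t \\ s \\ -t \\ 0 \\ t \end{bmatrix} = s \begin{bmatrix} -1 \\ 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} + t \begin{bmatrix} -1 \\ 0 \\ -1 \\ 0 \\ 1 \end{bmatrix}$$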

206

Example

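which shows that the vectors

$$v_1 = \begin{bmatrix} -1 \\ 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} \quad \text{and} \quad v_2 = \begin{bmatrix} -1 \\ 0 \\ -1 \\ 0 \\ 1 \end{bmatrix}$$

span the solution space.

Since they are also linearly independent, {v1, v2} is a basis, and the solution space is two-dimensional.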
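A quick check that v1 and v2 really lie in the solution space is to multiply them by the coefficient matrix. The sketch below assumes the coefficient signs that are consistent with the stated general solution (in particular, -x1 - x2 + 2x3 - 3x4 + x5 = 0 for the second equation); `matvec` is an illustrative helper.

```python
def matvec(A, x):
    """Return the product A x for a matrix given as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Coefficient matrix of the homogeneous system (signs assumed as described above).
A = [
    [2, 2, -1, 0, 1],
    [-1, -1, 2, -3, 1],
    [1, 1, -2, 0, -1],
    [0, 0, 1, 1, 1],
]
v1 = [-1, 1, 0, 0, 0]
v2 = [-1, 0, -1, 0, 1]

print(matvec(A, v1), matvec(A, v2))

# Any combination s*v1 + t*v2 is again a solution, e.g. s = 2, t = -3.
x = [2 * a - 3 * b for a, b in zip(v1, v2)]
print(matvec(A, x))
```

That every combination s v1 + t v2 is again a solution is exactly the closure property that makes the solution set a subspace.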

207

Theorems

Theorem 42 (Plus/Minus Theorem)

Let S be a nonempty set of vectors in a vector space

V.

If S is a linearly independent set, and if v is a vector in V that is

outside of span(S), then the set S ∪ {v} that results by inserting

v into S is still linearly independent.

If v is a vector in S that is expressible as a linear combination

of other vectors in S, and if S – {v} denotes the set obtained by

removing v from S, then S and S – {v} span the same space;

that is, span(S) = span(S – {v})

208

Theorems

Theorem 43

If V is an n-dimensional vector space, and if S is a set

in V with exactly n vectors, then S is a basis for V if

either S spans V or S is linearly independent.

209

Example

Show that v1 = (-3, 7) and v2 = (5, 5) form a basis for

R2 by inspection.

Solution:

Neither vector is a scalar multiple of the other

The two vectors form a linearly independent set in

the 2-D space R2

The two vectors form a basis by Theorem 5.4.5.

210

Example

Show that v1 = (2, 0, 1) , v2 = (4, 0, 7), v3 = (-1, 1, 4)

form a basis for R3 by inspection.

Solution:

The vectors v1 and v2 form a linearly independent set

in the xz-plane.

The vector v3 is outside of the xz-plane, so the set {v1,

v2 , v3} is also linearly independent.

Since R3 is three-dimensional, Theorem 5.4.5 implies

that {v1, v2 , v3} is a basis for R3.

211

Theorems

Theorem 44

Let S be a finite set of vectors in a finite-dimensional vector space V.

If S spans V but is not a basis for V, then S can be

reduced to a basis for V by removing appropriate

vectors from S.

If S is a linearly independent set that is not already a

basis for V, then S can be enlarged to a basis for V

by inserting appropriate vectors into S.

212

Theorems

Theorem 45

If W is a subspace of a finite-dimensional

vector space V, then dim(W) ≤ dim(V).

If dim(W) = dim(V), then W = V.

213

Definition

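For an m×n matrix

$$A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}$$

the vectors

$$r_1 = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \end{bmatrix}, \quad r_2 = \begin{bmatrix} a_{21} & a_{22} & \cdots & a_{2n} \end{bmatrix}, \quad \ldots, \quad r_m = \begin{bmatrix} a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}$$

in Rn formed from the rows of A are called the row vectors of A, and the vectors

$$c_1 = \begin{bmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{m1} \end{bmatrix}, \quad c_2 = \begin{bmatrix} a_{12} \\ a_{22} \\ \vdots \\ a_{m2} \end{bmatrix}, \quad \ldots, \quad c_n = \begin{bmatrix} a_{1n} \\ a_{2n} \\ \vdots \\ a_{mn} \end{bmatrix}$$

in Rm formed from the columns of A are called the

column vectors of A.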

214

Example

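Let

$$A = \begin{bmatrix} 2 & 1 & 0 \\ 3 & -1 & 4 \end{bmatrix}$$

The row vectors of A are

r1 = [2 1 0] and r2 = [3 -1 4]

and the column vectors of A are

$$c_1 = \begin{bmatrix} 2 \\ 3 \end{bmatrix}, \quad c_2 = \begin{bmatrix} 1 \\ -1 \end{bmatrix}, \quad \text{and} \quad c_3 = \begin{bmatrix} 0 \\ 4 \end{bmatrix}$$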

215

Row Space and Column Space

Definition

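If A is an m×n matrix, then the subspace of Rn

spanned by the row vectors of A is called the row

space of A, and the subspace of Rm spanned by the

column vectors is called the column space of A.

The solution space of the homogeneous system of

equations Ax = 0, which is a subspace of Rn, is called

the nullspace of A.

$$A_{m \times n} = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}, \qquad c_1 = \begin{bmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{m1} \end{bmatrix}, \quad c_2 = \begin{bmatrix} a_{12} \\ a_{22} \\ \vdots \\ a_{m2} \end{bmatrix}, \quad \ldots, \quad c_n = \begin{bmatrix} a_{1n} \\ a_{2n} \\ \vdots \\ a_{mn} \end{bmatrix}$$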

216

Row Space and Column Space

Theorem 46

A system of linear equations Ax = b is consistent if

and only if b is in the column space of A.

217

Example

Let Ax = b be the linear system

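$$\begin{bmatrix} -1 & 3 & 2 \\ 1 & 2 & -3 \\ 2 & 1 & -2 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 1 \\ -9 \\ -3 \end{bmatrix}$$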

Show that b is in the column space of A, and

express b as a linear combination of the column

vectors of A.

218

Example

Solution:

Solving the system by Gaussian elimination yields

x1 = 2, x2 = -1, x3 = 3

Since the system is consistent, b is in the column

space of A.

Moreover, it follows that

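$$2 \begin{bmatrix} -1 \\ 1 \\ 2 \end{bmatrix} - \begin{bmatrix} 3 \\ 2 \\ 1 \end{bmatrix} + 3 \begin{bmatrix} 2 \\ -3 \\ -2 \end{bmatrix} = \begin{bmatrix} 1 \\ -9 \\ -3 \end{bmatrix}$$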
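The identity above says that Ax is the linear combination of the columns of A weighted by the entries of x. The sketch below checks this for the example; the signs of A and b are an assumption (they are the ones consistent with the solution x1 = 2, x2 = -1, x3 = 3), and the variable names are illustrative.

```python
# Assumed signs for the system of this example (consistent with x = (2, -1, 3)).
A = [
    [-1, 3, 2],
    [1, 2, -3],
    [2, 1, -2],
]
b = [1, -9, -3]
x = [2, -1, 3]

columns = [list(col) for col in zip(*A)]     # c1, c2, c3

# Linear combination x1*c1 + x2*c2 + x3*c3, computed column by column.
combination = [sum(xj * col[i] for xj, col in zip(x, columns)) for i in range(3)]

# The usual row-by-column product A x, for comparison.
product = [sum(a * xj for a, xj in zip(row, x)) for row in A]

print(combination, product)
```

Both computations give b, which is why consistency of Ax = b and membership of b in the column space are the same statement.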

219

General and Particular Solutions

Theorem 47

If x0 denotes any single solution of a consistent

linear system Ax = b, and if v1, v2, …, vk form a

basis for the nullspace of A, (that is, the

solution space of the homogeneous system Ax =

0), then every solution of Ax = b can be

expressed in the form

x = x0 + c1v1 + c2v2 + · · · + ckvk

Conversely, for all choices of scalars c1, c2, …,

ck the vector x in this formula is a solution of

Ax = b.

220

General and Particular Solutions

Remark

The vector x0 is called a particular solution of

Ax = b.

The expression x0 + c1v1 + · · · + ckvk is called

the general solution of Ax = b, the expression

c1v1 + · · · + ckvk is called the general solution

of Ax = 0.

The general solution of Ax = b is the sum of

any particular solution of Ax = b and the

general solution of Ax = 0.

221

Example (General Solution of Ax

= b)

The solution to the nonhomogeneous

system

x1 + 3x2 - 2x3 + 2x5 = 0

2x1 + 6x2 - 5x3 - 2x4 + 4x5 - 3x6 = -1

5x3 + 10x4 + 15x6 = 5

2x1 + 6x2 + 8x4 + 4x5 + 18x6 = 6

is

x1 = -3r - 4s - 2t, x2 = r,

x3 = -2s, x4 = s,

x5 = t, x6 = 1/3

222

Example (General Solution of Ax

= b)

The result can be written in vector form as

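$$\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5 \\ x_6 \end{bmatrix} = \begin{bmatrix} -3r - 4s - 2t \\ r \\ -2s \\ s \\ t \\ 1/3 \end{bmatrix} = \underbrace{\begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 1/3 \end{bmatrix}}_{x_0} + \underbrace{r \begin{bmatrix} -3 \\ 1 \\ 0 \\ 0 \\ 0 \\ 0 \end{bmatrix} + s \begin{bmatrix} -4 \\ 0 \\ -2 \\ 1 \\ 0 \\ 0 \end{bmatrix} + t \begin{bmatrix} -2 \\ 0 \\ 0 \\ 0 \\ 1 \\ 0 \end{bmatrix}}_{x}$$

which is the general solution.

The vector x0 is a particular solution of the

nonhomogeneous system, and the linear

combination x is the general solution of the

homogeneous system.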
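The structure "particular solution plus nullspace" can be verified by substitution. The sketch below assumes the fourth equation reads 2x1 + 6x2 + 8x4 + 4x5 + 18x6 = 6, which is the form consistent with the stated solution; the names `matvec`, `basis`, and `general_solution` are illustrative.

```python
from fractions import Fraction

def matvec(A, x):
    """Return the product A x for a matrix given as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Coefficient matrix and right-hand side (fourth row assumed as described above).
A = [
    [1, 3, -2, 0, 2, 0],
    [2, 6, -5, -2, 4, -3],
    [0, 0, 5, 10, 0, 15],
    [2, 6, 0, 8, 4, 18],
]
b = [0, -1, 5, 6]

x0 = [0, 0, 0, 0, 0, Fraction(1, 3)]       # particular solution
basis = [                                  # vectors multiplying r, s, t
    [-3, 1, 0, 0, 0, 0],
    [-4, 0, -2, 1, 0, 0],
    [-2, 0, 0, 0, 1, 0],
]

def general_solution(r, s, t):
    coeffs = (r, s, t)
    return [x0[i] + sum(c * v[i] for c, v in zip(coeffs, basis)) for i in range(6)]

grid = range(-2, 3)
ok_particular = matvec(A, x0) == b
ok_homogeneous = all(matvec(A, v) == [0, 0, 0, 0] for v in basis)
ok_general = all(matvec(A, general_solution(r, s, t)) == b
                 for r in grid for s in grid for t in grid)
print(ok_particular, ok_homogeneous, ok_general)
```

The third check follows from the first two by linearity: A(x0 + x) = Ax0 + Ax = b + 0.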

223

Example

Find a basis for the nullspace of

$$A = \begin{bmatrix} 2 & 2 & -1 & 0 & 1 \\ -1 & -1 & 2 & -3 & 1 \\ 1 & 1 & -2 & 0 & -1 \\ 0 & 0 & 1 & 1 & 1 \end{bmatrix}$$

224

Example

Solution

The nullspace of A is the solution space of the homogeneous system

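2x1 + 2x2 - x3 + x5 = 0

-x1 - x2 + 2x3 - 3x4 + x5 = 0

x1 + x2 - 2x3 - x5 = 0

x3 + x4 + x5 = 0

In Example 10 of Section 5.4 we showed that the vectors

$$v_1 = \begin{bmatrix} -1 \\ 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} \quad \text{and} \quad v_2 = \begin{bmatrix} -1 \\ 0 \\ -1 \\ 0 \\ 1 \end{bmatrix}$$

form a basis for the nullspace.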

225

Theorems

Theorem 48

Elementary row operations do not change

the nullspace or the row space of a matrix.

Theorem 49

If A and B are row equivalent matrices, then:

A given set of column vectors of A is linearly

independent if and only if the corresponding column

vectors of B are linearly independent.

A given set of column vectors of A forms a basis for

the column space of A if and only if the

corresponding column vectors of B form a basis for

the column space of B.

226

Theorems

Theorem 50

If a matrix R is in row echelon form, then the

row vectors with the leading 1’s (i.e., the

nonzero row vectors) form a basis for the row

space of R, and the column vectors with the

leading 1’s of the row vectors form a basis for

the column space of R.

227

Example

Find bases for the row and column spaces of

$$A = \begin{bmatrix} 1 & -3 & 4 & -2 & 5 & 4 \\ 2 & -6 & 9 & -1 & 8 & 2 \\ 2 & -6 & 9 & -1 & 9 & 7 \\ -1 & 3 & -4 & 2 & -5 & -4 \end{bmatrix}$$

229

Example

Solution:

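Reducing A to row-echelon form we obtain

$$A = \begin{bmatrix} 1 & -3 & 4 & -2 & 5 & 4 \\ 2 & -6 & 9 & -1 & 8 & 2 \\ 2 & -6 & 9 & -1 & 9 & 7 \\ -1 & 3 & -4 & 2 & -5 & -4 \end{bmatrix} \quad \longrightarrow \quad R = \begin{bmatrix} 1 & -3 & 4 & -2 & 5 & 4 \\ 0 & 0 & 1 & 3 & -2 & -6 \\ 0 & 0 & 0 & 0 & 1 & 5 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{bmatrix}$$

Note the correspondence: the leading 1's of R are in columns 1, 3, and 5, so columns 1, 3, and 5 of A are used below.

By Theorem 5.5.6 and 5.5.5(b), bases for the row and column spaces are

r1 = [1 -3 4 -2 5 4]

r2 = [0 0 1 3 -2 -6]

r3 = [0 0 0 0 1 5]

and

$$c_1 = \begin{bmatrix} 1 \\ 2 \\ 2 \\ -1 \end{bmatrix}, \quad c_3 = \begin{bmatrix} 4 \\ 9 \\ 9 \\ -4 \end{bmatrix}, \quad c_5 = \begin{bmatrix} 5 \\ 8 \\ 9 \\ -5 \end{bmatrix}$$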

230

Example (Basis for a Vector Space

Using Row Operations )

Find a basis for the space spanned by the vectors

v1= (1, -2, 0, 0, 3), v2 = (2, -5, -3, -2, 6),

v3 = (0, 5, 15, 10, 0), v4 = (2, 6, 18, 8, 6).

231

Example (Basis for a Vector Space

Using Row Operations )

Solution: (Write down the vectors as row vectors

first!)

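$$\begin{bmatrix} 1 & -2 & 0 & 0 & 3 \\ 2 & -5 & -3 & -2 & 6 \\ 0 & 5 & 15 & 10 & 0 \\ 2 & 6 & 18 & 8 & 6 \end{bmatrix} \quad \longrightarrow \quad \begin{bmatrix} 1 & -2 & 0 & 0 & 3 \\ 0 & 1 & 3 & 2 & 0 \\ 0 & 0 & 1 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{bmatrix}$$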

The nonzero row vectors in this matrix are

w1= (1, -2, 0, 0, 3), w2 = (0, 1, 3, 2, 0), w3 = (0, 0, 1, 1, 0)

These vectors form a basis for the row space and

consequently form a basis for the subspace of R5

spanned by v1, v2, v3, and v4.

232

Remarks

Keeping in mind that A and R may have different

column spaces, we cannot find a basis for the

column space of A directly from the column

vectors of R.

However, it follows from Theorem 5.5.5b that if

we can find a set of column vectors of R that

forms a basis for the column space of R, then the

corresponding column vectors of A will form a

basis for the column space of A.

233

Remarks

In the previous example, the basis vectors

obtained for the column space of A consisted of

column vectors of A, but the basis vectors

obtained for the row space of A were not all

vectors of A.

Transpose of the matrix can be used to solve this

problem.

234

Example (Basis for the Row Space

of a Matrix )

Find a basis for the row space of

$$A = \begin{bmatrix} 1 & -2 & 0 & 0 & 3 \\ 2 & -5 & -3 & -2 & 6 \\ 0 & 5 & 15 & 10 & 0 \\ 2 & 6 & 18 & 8 & 6 \end{bmatrix}$$

consisting entirely of row vectors from A.

235

Example (Basis for the Row Space

of a Matrix )

Solution:

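Transposing A and reducing to row-echelon form yields

$$A^T = \begin{bmatrix} 1 & 2 & 0 & 2 \\ -2 & -5 & 5 & 6 \\ 0 & -3 & 15 & 18 \\ 0 & -2 & 10 & 8 \\ 3 & 6 & 0 & 6 \end{bmatrix} \quad \longrightarrow \quad \begin{bmatrix} 1 & 2 & 0 & 2 \\ 0 & 1 & -5 & -10 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \end{bmatrix}$$

The first, second, and fourth columns contain the leading 1's, so a basis for the column space of AT is

$$c_1 = \begin{bmatrix} 1 \\ -2 \\ 0 \\ 0 \\ 3 \end{bmatrix}, \quad c_2 = \begin{bmatrix} 2 \\ -5 \\ -3 \\ -2 \\ 6 \end{bmatrix}, \quad \text{and} \quad c_4 = \begin{bmatrix} 2 \\ 6 \\ 18 \\ 8 \\ 6 \end{bmatrix}$$

Thus, a basis for the row space of A is

r1 = [1 -2 0 0 3], r2 = [2 -5 -3 -2 6], r4 = [2 6 18 8 6]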

236

Example (Basis and Linear

Combinations )

(a) Find a subset of the vectors v1 = (1, -2, 0, 3), v2

= (2, -5, -3, 6), v3 = (0, 1, 3, 0), v4 = (2, -1, 4, -7),

v5 = (5, -8, 1, 2) that forms a basis for the space

spanned by these vectors.

(b) Express each vector not in the basis as a linear

combination of the basis vectors.

237

Example (Basis and Linear

Combinations )

Solution (a):

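Form the matrix whose columns are v1, v2, v3, v4, v5 and reduce it to reduced row-echelon form; denote the columns of the result by w1, w2, w3, w4, w5:

$$\begin{bmatrix} 1 & 2 & 0 & 2 & 5 \\ -2 & -5 & 1 & -1 & -8 \\ 0 & -3 & 3 & 4 & 1 \\ 3 & 6 & 0 & -7 & 2 \end{bmatrix} \quad \longrightarrow \quad \begin{bmatrix} 1 & 0 & 2 & 0 & 1 \\ 0 & 1 & -1 & 0 & 1 \\ 0 & 0 & 0 & 1 & 1 \\ 0 & 0 & 0 & 0 & 0 \end{bmatrix}$$

The leading 1's occur in columns 1, 2, and 4.

Thus, {v1, v2, v4} is a basis for the column

space of the matrix.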

238

Example

Solution (b):

We can express w3 as a linear combination of

w1 and w2, express w5 as a linear combination

of w1, w2, and w4 (Why?). By inspection, these

linear combination are

w3 = 2w1 – w2

w5 = w1 + w2 + w4

239

Example

We call these the dependency equations. The

corresponding relationships in the original

vectors are

v3 = 2v1 – v2

v5 = v1 + v2 + v4
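The dependency equations can be confirmed directly on the original vectors. The sketch below uses the vectors given in part (a) of this example; `combo` is an illustrative helper name.

```python
def combo(coeffs, vectors):
    """Return the linear combination sum(k_i * v_i) as a tuple."""
    size = len(vectors[0])
    return tuple(sum(k * v[i] for k, v in zip(coeffs, vectors)) for i in range(size))

v1 = (1, -2, 0, 3)
v2 = (2, -5, -3, 6)
v3 = (0, 1, 3, 0)
v4 = (2, -1, 4, -7)
v5 = (5, -8, 1, 2)

# v3 = 2 v1 - v2
print(combo((2, -1), (v1, v2)) == v3)

# v5 = v1 + v2 + v4
print(combo((1, 1, 1), (v1, v2, v4)) == v5)
```

The coefficients are read off the non-pivot columns of the reduced matrix, which is why the same relations hold among the original columns.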

240

Four Fundamental Matrix Spaces

Consider a matrix A and its transpose AT together, then

there are six vector spaces of interest:

row space of A, row space of AT

column space of A, column space of AT

null space of A, null space of AT

However, the fundamental matrix spaces associated

with A are

row space of A, column space of A

null space of A, null space of AT

241

Four Fundamental Matrix Spaces

If A is an m×n matrix, then the row space of A and

nullspace of A are subspaces of Rn and the column

space of A and the nullspace of AT are subspaces of Rm

What is the relationship between the dimensions of

these four vector spaces?

242

Dimension and Rank

Theorem 51

If A is any matrix, then the row space and column

space of A have the same dimension.

Definition

The common dimension of the row and column

space of a matrix A is called the rank of A and is

denoted by rank(A); the dimension of the nullspace

of A is called the nullity of A and is denoted

by nullity(A).

243

Example (Rank and Nullity)

Find the rank and nullity of the matrix

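$$A = \begin{bmatrix} -1 & 2 & 0 & 4 & 5 & -3 \\ 3 & -7 & 2 & 0 & 1 & 4 \\ 2 & -5 & 2 & 4 & 6 & 1 \\ 4 & -9 & 2 & -4 & -4 & 7 \end{bmatrix}$$

Solution:

The reduced row-echelon form of A is

$$\begin{bmatrix} 1 & 0 & -4 & -28 & -37 & 13 \\ 0 & 1 & -2 & -12 & -16 & 5 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{bmatrix}$$

Since there are two nonzero rows, the row space and column space are both two-dimensional, so rank(A) = 2.

244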

Example (Rank and Nullity)

The corresponding system of equations will be

x1 – 4x3 – 28x4 – 37x5 + 13x6 = 0

x2 – 2x3 – 12x4 – 16 x5+ 5 x6 = 0

245

Example (Rank and Nullity)

It follows that the general solution of the

system is

x1 = 4r + 28s + 37t – 13u,

x2 = 2r + 12s + 16t – 5u,

x3 = r, x4 = s, x5 = t, x6 = u

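or

$$\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5 \\ x_6 \end{bmatrix} = r \begin{bmatrix} 4 \\ 2 \\ 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} + s \begin{bmatrix} 28 \\ 12 \\ 0 \\ 1 \\ 0 \\ 0 \end{bmatrix} + t \begin{bmatrix} 37 \\ 16 \\ 0 \\ 0 \\ 1 \\ 0 \end{bmatrix} + u \begin{bmatrix} -13 \\ -5 \\ 0 \\ 0 \\ 0 \\ 1 \end{bmatrix}$$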

Thus, nullity(A) = 4.
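The rank and nullity found here can be reproduced by counting pivots during row reduction. The following is a minimal sketch with exact fractions; `rank` is an illustrative helper, the signs of A are assumed to be those consistent with the reduced form shown in this example, and the nullity is counted as the number of free variables (columns without a pivot).

```python
from fractions import Fraction

def rank(A):
    """Count the pivots found while reducing A to row-echelon form."""
    M = [[Fraction(v) for v in row] for row in A]
    rows, cols = len(M), len(M[0])
    r = 0                                   # index of the next pivot row
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue                        # no pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, rows):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r

# Matrix of this example (signs assumed as described above).
A = [
    [-1, 2, 0, 4, 5, -3],
    [3, -7, 2, 0, 1, 4],
    [2, -5, 2, 4, 6, 1],
    [4, -9, 2, -4, -4, 7],
]

rank_A = rank(A)
nullity_A = len(A[0]) - rank_A     # free variables = columns without a pivot
print(rank_A, nullity_A)
```

Here the six columns split into two pivot columns and four free columns, matching the four parameters r, s, t, u in the general solution.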

246

Theorems

Theorem 52

If A is any matrix, then rank(A) = rank(AT).

Theorem 53 (Dimension Theorem for Matrices)

If A is a matrix with n columns, then rank(A) +