here - carrot!!!

... You can determine omega for the whole dataset; however, usually not all sites in a sequence are under selection all the time. PAML (and other programs) allow you to determine omega either for each site over the whole tree, ...


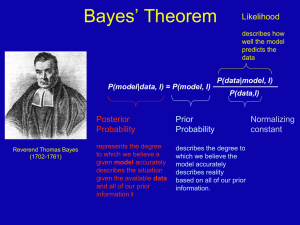

Consider Exercise 3.52 We define two events as follows: H = the

... We now calculate the following conditional probabilities. The probability of F given H, denoted by P(F | H), is _____. We could use the conditional probability formula on page 138 of our text. Note that P(F | H̄) = ______. Comparing P(F), P(F | H), and P(F | H̄), we note that the occurrence or nonoc ...


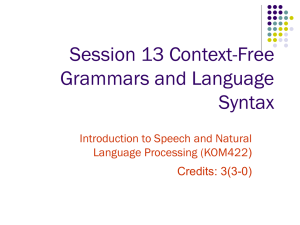

Concepts in Automata Theory and Formal Proof

... Clearly this NP is really about flights. That’s the central, critical noun in this NP. Let’s call that the head. We can dissect this kind of NP into the stuff that can come before the head and the stuff that can come after it. ...


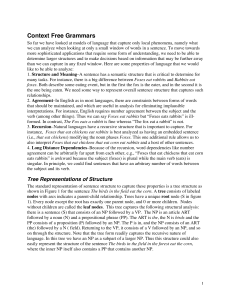

Context Free Grammar

... • The parser often builds valid trees for a portion of the input and then discards them during backtracking because they fail to cover all of the input. Later, the parser has to rebuild the same trees again in the search. • The table shows how many times each constituent of the example sentenc ...


Context Free Grammars

... noun (.1 - the letter “i”), hate is a verb, annoying is a verb (.7) or ADJ (.3), and neighbors is a noun. We first add all the lexical probabilities, and then use the rules to build any other constituents of length 1 as shown in Figure 6. For example, at position 1 we have an NP constructed from the ...


LSA.303 Introduction to Computational Linguistics

... Deals well with free word order languages, where the constituent structure is quite fluid. Parsing is much faster than with CFG-based parsers. Dependency structure often captures the syntactic relations needed by later applications. CFG-based approaches often extract this same information from trees ...


Introduction to Computational Natural Language

... and parameters framework are learnable because each class is of finite size. In fact a simple Blind Guess Learner is guaranteed to succeed in the long run for any finite class of grammars. But, is this not-so-blind-learner guaranteed (it has a parse test) to converge on all possible targets in a P&P ...


Generative grammar

... what we say or write derived structures which occur after transformation of deep structure statements ...


General Probability, I: Rules of probability

... Notes and tips • Draw Venn diagrams: Venn diagrams help you picture what is going on and derive the appropriate probabilities. • Probabilities behave like areas: A very useful rule of thumb is that probabilities behave like areas in the associated Venn diagrams. All of the above formulas are consi ...


Art N pronoun proper noun

... which would be represented differently in the deep structure. Phrases can also be ambiguous: "The hatred of the killers" can mean 1) the killers hated someone, or 2) someone hated the killers. So, the grammar must be capable of showing how a single underlying, abstract representation can become different surfa ...


probabilistic lexicalized context-free grammars

... understanding task. Probabilistic parsing is a key contribution to disambiguation. Choose the most probable parse as the answer: it is that simple. In addition, with the help of subcategorization and lexical dependency information, and so of probabilistic lexicalized context-free grammars (PLCFG) ...


bioinfo5a

... sequence Q = q1,…,qT that has the highest conditional probability given O. In other words, we want to find a Q that makes P[Q | O] maximal. There may be many Q’s that make P[Q | O] maximal. We give an algorithm to find one of them. ...
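
The snippet does not name the algorithm or the model. Assuming the setting is a hidden Markov model, the usual choice is the Viterbi algorithm, sketched below. The function name `viterbi` and the parameters `start_p`, `trans_p`, and `emit_p` are illustrative names, not taken from the source. Because P[O] does not depend on Q, maximizing the joint probability P[Q, O] also maximizes P[Q | O]. When several sequences tie, the sketch returns just one of them.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (log_prob, path) for one most probable state sequence.

    Assumes a hidden Markov model (an assumption, not stated in the source):
    start_p[s]    = P(q1 = s)
    trans_p[r][s] = P(q_{t+1} = s | q_t = r)
    emit_p[s][o]  = P(o_t = o | q_t = s)
    """
    def log(p):
        # Log-space avoids underflow on long sequences; log(0) becomes -inf.
        return math.log(p) if p > 0 else float("-inf")

    # best[t][s]: log-probability of the best path that ends in state s at time t.
    best = [{s: log(start_p[s]) + log(emit_p[s][observations[0]]) for s in states}]
    # back[t][s]: predecessor of s on that best path.
    back = [{}]

    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((r, best[t - 1][r] + log(trans_p[r][s])) for r in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = score + log(emit_p[s][observations[t]])
            back[t][s] = prev

    # Pick the best final state, then follow the back-pointers to recover Q.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return best[-1][last], path
```

The table has one entry per time step and state, so the running time is proportional to T times the square of the number of states, rather than exponential in T as a search over all sequences would be.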


Some Probability Theory and Computational models

... • Context Free Grammars are a more natural model for Natural Language • Syntax rules are very easy to formulate using CFGs • Provably more expressive than Finite State Machines – E.g. Can check for balanced parentheses ...
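
The balanced-parentheses example can be made concrete with a small recognizer. The grammar `S -> ( S ) S | ε` and the function names `parse_s` and `is_balanced` are assumptions chosen for illustration; the snippet itself gives no grammar. The sketch is a recursive-descent recognizer for that grammar. The recursion keeps track of open parentheses to any depth, which a finite state machine, with its fixed number of states, cannot do.

```python
def parse_s(text, i=0):
    """Try to derive S from text starting at index i.

    Returns the index just past the derived substring, or None on failure.
    Assumed grammar (hypothetical, for illustration): S -> ( S ) S | epsilon
    """
    if i < len(text) and text[i] == "(":
        # Rule S -> ( S ) S: match "(", an inner S, ")", then a trailing S.
        j = parse_s(text, i + 1)
        if j is None or j >= len(text) or text[j] != ")":
            return None
        return parse_s(text, j + 1)
    # Rule S -> epsilon: consume nothing.
    return i


def is_balanced(text):
    """True if the whole string is derivable from S."""
    return parse_s(text) == len(text)
```

For example, `is_balanced("(())()")` holds while `is_balanced("(()")` does not. Very deep nesting would hit Python's recursion limit, so this is a teaching sketch rather than a production checker.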


LARG-20010510

... • In practice, languages are not logical structures. • Often, spoken sentences are not precisely grammatical. The solution of expanding the grammar leads to an explosion of grammar rules. • A large grammar will lead to many parses of the same sentence. Clearly, some parses are more accurate than others. S ...
