Lecture02

... and you likely recognize the right-hand side as the usual definition of the expected value of a discrete random variable. We can extend Eq. 1.8 to mixed random variables by just breaking them into an ordinary pdf and the sum of a bunch of Dirac functions. (There’s a little more to the story than thi ...

PSYCHOLOGICAL STATISTICS I Semester UNIVERSITY OF CALICUT B.Sc. COUNSELLING PSYCHOLOGY

... Under this method, random selection is made of primary, intermediate and final units from a given population. There are several stages in which the sampling process is carried out. Initially random samples of first stage units are selected. As the second step, from the first stage units, second stag ...

MATH 1031 Probability Unit - Math User Home Pages

... Pictures help as well. In addition to the tree diagram mentioned in Section 10.1 of the text, the idea of slots is particularly useful in probability if you must order some group of objects. To use slots, simply draw dashes – enough dashes for the number of objects or stages that you have ...

3 - Rice University

... Recall that while discussing the method of intersection of events we mentioned that for the rule to apply the events should be independent. The method of intersection of events stated that ...

Probability and Stochastic Processes

... industrial setting, is often heavily dependent on computations to support and inform conclusions. Consider, for example, computer network analysis, models based on large data sets, and actuarial analysis. Whatever a probability student's potential future career, she or he will be better served by ha ...

HYPOTHESIS TESTING 1. Introduction 1.1. Hypothesis testing. Let {f

... 1.1. Hypothesis testing. Let {fθ}θ∈Θ be a family of pdfs. Let θ ∈ Θ, where θ is unknown. Let ΘN ⊂ Θ. A statement of the form ‘θ ∈ ΘN’ is a hypothesis. Let X = (X1, ..., Xn) be a random sample from fθ. A hypothesis test consists of a hypothesis called the null hypothesis, of the form θ ∈ ΘN, an ...

Supplement to Chapter 2 - Probability and Statistics

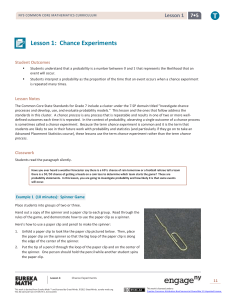

... the outcome is uncertain prior to taking the observations. An experiment whose outcomes are generated by a sequential process, such as flipping a coin three times and recording whether each flip is heads or tails, can be represented as a tree diagram, as illustrated in figure 2S-1. On each flip either ...

Probability

... is an innate disposition or propensity for things to happen. Long run propensities seem to coincide with the frequentist definition of probability although it is not clear what individual propensities are, or whether they obey the probability calculus. ...

Tools-Soundness-and-Completeness

... If we have proved (φ & ψ) on some line, then on any future line we may write down φ, and on any future line we may write down ψ. The result depends on everything (φ & ψ) depended on. ...

Reduction(4).pdf

... So far we have introduced a purely metaphysical notion of an experiment situation in which the θ values may be thought of as stochastic hidden variables, just like those considered in the hidden-variable interpretations of quantum mechanics. To introduce an epistemological element into our framework ...

Descriptive Assessment of Jeffrey’s Rule Jiaying Zhao () Daniel Osherson ()

... (3) is known as “Jeffrey’s rule.” It shows how change in the probability of B is propagated to G, without experience directly affecting G. It is straightforward to generalize (3) to finer partitions, in place of the binary partition B, B̄. Assuming invariance, it is easy to show that (3) defines a ge ...

A NOTE ON THE DISTRIBUTIONS OF THE MAXI-

... When ξn are standard normal, i.e., L = N (0, 1), we deal in (1) with an arbitrary Gaussian random process. As is well-known, for the distribution function F of M , x0 = inf{x ∈ R : F (x) > 0} may be finite, and then it is sometimes called a take-off point of the maximum of the Gaussian process. More ...

Probability interpretations

The word probability has been used in a variety of ways since it was first applied to the mathematical study of games of chance. Does probability measure the real, physical tendency of something to occur or is it a measure of how strongly one believes it will occur, or does it draw on both these elements? In answering such questions, mathematicians interpret the probability values of probability theory.

There are two broad categories of probability interpretations which can be called "physical" and "evidential" probabilities. Physical probabilities, which are also called objective or frequency probabilities, are associated with random physical systems such as roulette wheels, rolling dice and radioactive atoms. In such systems, a given type of event (such as the dice yielding a six) tends to occur at a persistent rate, or "relative frequency", in a long run of trials. Physical probabilities either explain, or are invoked to explain, these stable frequencies. Thus talking about physical probability makes sense only when dealing with well defined random experiments. The two main kinds of theory of physical probability are frequentist accounts (such as those of Venn, Reichenbach and von Mises) and propensity accounts (such as those of Popper, Miller, Giere and Fetzer).

Evidential probability, also called Bayesian probability (or subjectivist probability), can be assigned to any statement whatsoever, even when no random process is involved, as a way to represent its subjective plausibility, or the degree to which the statement is supported by the available evidence. On most accounts, evidential probabilities are considered to be degrees of belief, defined in terms of dispositions to gamble at certain odds. The four main evidential interpretations are the classical (e.g. Laplace's) interpretation, the subjective interpretation (de Finetti and Savage), the epistemic or inductive interpretation (Ramsey, Cox) and the logical interpretation (Keynes and Carnap).

Some interpretations of probability are associated with approaches to statistical inference, including theories of estimation and hypothesis testing. The physical interpretation, for example, is taken by followers of "frequentist" statistical methods, such as R. A. Fisher, Jerzy Neyman and Egon Pearson. Statisticians of the opposing Bayesian school typically accept the existence and importance of physical probabilities, but also consider the calculation of evidential probabilities to be both valid and necessary in statistics. This article, however, focuses on the interpretations of probability rather than theories of statistical inference.

The terminology of this topic is rather confusing, in part because probabilities are studied within a variety of academic fields. The word "frequentist" is especially tricky. To philosophers it refers to a particular theory of physical probability, one that has more or less been abandoned. To scientists, on the other hand, "frequentist probability" is just another name for physical (or objective) probability. Those who promote Bayesian inference view "frequentist statistics" as an approach to statistical inference that recognises only physical probabilities. Also the word "objective", as applied to probability, sometimes means exactly what "physical" means here, but is also used of evidential probabilities that are fixed by rational constraints, such as logical and epistemic probabilities.

It is unanimously agreed that statistics depends somehow on probability. But, as to what probability is and how it is connected with statistics, there has seldom been such complete disagreement and breakdown of communication since the Tower of Babel. Doubtless, much of the disagreement is merely terminological and would disappear under sufficiently sharp analysis.
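
The "relative frequency" idea described above for physical probabilities, where an event such as rolling a six occurs at a persistent rate over a long run of trials, can be illustrated with a small simulation. The following is only a sketch: the function name `relative_frequency_of_six`, the fixed seed, and the use of a pseudo-random generator in place of a physical die are all assumptions made for illustration, not part of the source.

```python
import random


def relative_frequency_of_six(num_rolls, seed=0):
    """Simulate num_rolls rolls of a fair six-sided die and return
    the fraction of rolls that came up six.

    The seed is fixed (an illustrative choice) so runs are repeatable.
    """
    rng = random.Random(seed)
    sixes = sum(1 for _ in range(num_rolls) if rng.randint(1, 6) == 6)
    return sixes / num_rolls


if __name__ == "__main__":
    # As the number of trials grows, the relative frequency tends to
    # settle near 1/6, the stable rate that physical interpretations
    # are invoked to explain.
    for n in (100, 10_000, 1_000_000):
        print(n, relative_frequency_of_six(n))
```

A simulation of this kind shows only the stabilizing of frequencies; it does not settle whether probability should be read as a frequency, a propensity, or a degree of belief.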