Neural Nets: introduction

... know how it's done. – Even if we had a good idea about how to do it, the program might be horrendously complicated. • Instead of writing a program by hand, we collect lots of examples that specify the correct output for a given input. • A machine learning algorithm then takes these examples and produ ...

feedback-poster

... The states of ReLU and max pooling dominate everything. But for most popular convolutional neural networks, the states of ReLU and max pooling are determined only by the input. ...

notes as

... know how it's done. – Even if we had a good idea about how to do it, the program might be horrendously complicated. • Instead of writing a program by hand, we collect lots of examples that specify the correct output for a given input. • A machine learning algorithm then takes these examples and produ ...

overview imagenet neural networks alexnet meta-network

... Previous winner of ImageNet, the large, deep convolutional neural network trained by Alex Krizhevsky et al. to classify the 1.2 million high-resolution images in the ImageNet LSVRC-2012 contest into the 1000 different classes. AlexNet was constructed similarly to LeNet, but was expanded in every d ...

Neural network

... • In the training mode, the neuron can be trained to fire (or not), for particular input patterns. • In the using mode, when a taught input pattern is detected at the input, its associated output becomes the current output. If the input pattern does not belong in the taught list of input patterns, t ...

PPT

... and Chagall with 95% accuracy (when presented with pictures they had been trained on). Discrimination still 85% successful for previously unseen paintings of the artists. Pigeons do not simply memorise the pictures. They can extract and recognise patterns (the ‘style’). They generalise from the ...

Neural Networks vs. Traditional Statistics in Predicting Case Worker

... • TRANSFER FUNCTIONS THAT NEURAL NETWORKS USE ARE STATISTICAL • THE PROCESS OF ADJUSTING WEIGHTS (passing data through the network) TO ACHIEVE A BETTER FIT TO THE DATA USING WELL-DEFINED ...

lecture notes - The College of Saint Rose

... A perceptron has initial (often random) weights, typically in the range [-0.5, 0.5]. Apply an established training dataset. Calculate the error as ...
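
The steps named in this snippet can be sketched in a few lines of Python. The snippet is cut off before it states its error formula, so the sketch assumes the common perceptron rule (error = target minus thresholded output); the function names, the learning rate, the epoch count, and the AND dataset are illustrative choices, not taken from the lecture notes.

```python
import random

def train_perceptron(samples, epochs=200, lr=0.1, seed=0):
    """Train a single threshold unit; returns (weights, bias)."""
    rng = random.Random(seed)
    n_inputs = len(samples[0][0])
    # Initial random weights in [-0.5, 0.5], as the snippet describes.
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
    bias = rng.uniform(-0.5, 0.5)
    for _ in range(epochs):
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            output = 1 if activation > 0 else 0
            # Assumed error definition: target minus actual output.
            error = target - output
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

def predict(weights, bias, inputs):
    """Thresholded output of the trained unit."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0

# Hypothetical training set: logical AND, which is linearly separable.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_DATA)
```

Because AND is linearly separable, the perceptron convergence theorem guarantees that the updates stop after a finite number of mistakes; the epoch count here is chosen generously for that reason.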

NNs - Unit information

... ◦ Although neurons themselves are complicated, they don't exhibit complex behaviour on their own. This is the key feature that makes it a viable computational intelligence approach. ...

GameAI_NeuralNetworks

... All data values are normalized to the range 0.0-1.0. Use 0.1 for inactive (false) output and 0.9 for active (true) output: it is impractical to achieve 0 or 1 for NN output, so use a reasonable target value ...

myelin sheath

... By means of the Hebbian Learning Rule, a circuit of continuously firing neurons could be learned by the network. ...

Document

... By means of the Hebbian Learning Rule, a circuit of continuously firing neurons could be learned by the network. ...

Katie Newhall Synchrony in stochastic pulse-coupled neuronal network models

... Synchrony in stochastic pulse-coupled neuronal network models Many pulse-coupled dynamical systems possess synchronous attracting states. Even stochastically driven model networks of Integrate and Fire neurons demonstrate synchrony over a large range of parameters. We study the interplay between ...

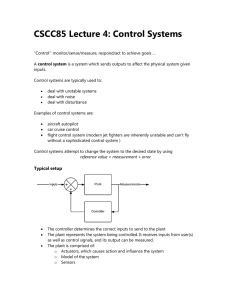

CSCC85 Lecture 4: Control Systems

... multiple inputs using different weights to approximate some unknown function y = g(x). ...

Supervised learning

... couples is the learning base. The aim of learning is to find, for each weight wij, a value that yields a small difference between the network's answer to the input vector and the desired output vector. ...

Slide 1

... Non-linear classification problem using NN Step 4: Now we are ready for the net synthesis ...

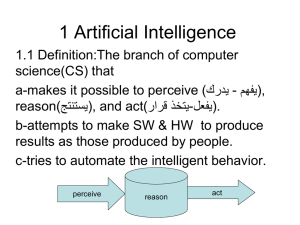

Artificial Intelligence

... 1.1 Definition: The branch of computer science (CS) that (a) makes it possible to perceive (realize, understand), reason (infer), and act (make a decision, do); (b) attempts to make software and hardware produce results like those produced by people; (c) tries to automate intelligent behavior. perceive ...

First-Pass Attachment Disambiguation with Recursive Neural

... • The simplified error backpropagation algorithm doesn’t allow learning of long-term dependencies • The backpropagation of the error signal is truncated at the context layer. • This makes computation simpler (no need to store the history of activations), and local in time • The calculated gradient for ...

Knowledge Representation (and some more Machine Learning)

... Expert: Intelligent Systems and Their Applications, vol. 11, no. 3, pp. 64-75, June 1996. (http://doi.ieeecomputersociety.org/10.1109/64.506755) ...

Exploring Artificial Neural Networks to discover Higgs at

... • The data was obtained from Rome ttbar AOD files • Once extracted, the weights were used to train the Neural Network ...

Artificial intelligence

... • After the September 11, 2001 attacks there was much renewed interest in and funding for threat-detection AI systems, including machine vision research and data mining ...

Catastrophic interference

Catastrophic interference, also known as catastrophic forgetting, is the tendency of an artificial neural network to completely and abruptly forget previously learned information upon learning new information. Neural networks are an important part of the network approach and connectionist approach to cognitive science. These networks use computer simulations to try to model human behaviours, such as memory and learning. Catastrophic interference is an important issue to consider when creating connectionist models of memory. It was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989), and Ratcliff (1990).

It is a radical manifestation of the ‘sensitivity-stability’ dilemma or the ‘stability-plasticity’ dilemma. Specifically, these problems refer to the difficulty of making an artificial neural network that is sensitive to, but not disrupted by, new information. Lookup tables and connectionist networks lie at opposite ends of the stability-plasticity spectrum. The former remain completely stable in the presence of new information but lack the ability to generalize, i.e. infer general principles, from new inputs. Connectionist networks like the standard backpropagation network, on the other hand, are very sensitive to new information and can generalize from new inputs.

Backpropagation models can be considered good models of human memory insofar as they mirror the human ability to generalize, but these networks often exhibit less stability than human memory. Notably, they are susceptible to catastrophic interference. This is a problem when attempting to model human memory because, unlike these networks, humans typically do not show catastrophic forgetting. Thus, catastrophic interference must be eliminated from backpropagation models in order to enhance their plausibility as models of human memory.
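
The contrast between a lookup table and a network with shared weights can be illustrated with a toy Python sketch. It is a deliberately minimal setup and not a reproduction of the experiments cited above: it assumes a single linear unit trained with the delta rule rather than a multilayer backpropagation network, and the two items, the learning rate, and all names are hypothetical. Item A is learned first, then item B is learned on its own; because both items use the first weight, learning B overwrites part of what encoded A.

```python
def train(weights, x, target, steps=200, lr=0.1):
    """Delta-rule updates on one linear unit for a single (input, target) pair."""
    w = list(weights)
    for _ in range(steps):
        output = sum(wi * xi for wi, xi in zip(w, x))
        error = target - output
        w = [wi + lr * error * xi for wi, xi in zip(w, x)]
    return w

def squared_error(weights, x, target):
    """Squared difference between the unit's output and the target."""
    output = sum(wi * xi for wi, xi in zip(weights, x))
    return (target - output) ** 2

# Hypothetical items: both inputs activate the first weight, so they interfere.
ITEM_A = ((1.0, 1.0), 1.0)
ITEM_B = ((1.0, 0.0), 0.0)

# Sequential learning: A first, then B alone (no rehearsal of A).
w = train([0.0, 0.0], *ITEM_A)
error_a_before = squared_error(w, *ITEM_A)   # close to 0: A is learned
w = train(w, *ITEM_B)
error_a_after = squared_error(w, *ITEM_A)    # about 0.25: A is partly lost
error_b_after = squared_error(w, *ITEM_B)    # close to 0: B is learned

# A lookup table stores each item separately, so adding B leaves A intact,
# but it has nothing to say about inputs it has never seen.
lookup = {ITEM_A[0]: ITEM_A[1]}
lookup[ITEM_B[0]] = ITEM_B[1]
```

In this sketch the forgetting is partial rather than total, but the mechanism is the one the text describes: new learning adjusts weights that old knowledge depends on, whereas the lookup table stays stable at the cost of generalization.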