Exponential Family Distributions

... of which are qualitative and some quantitative. Annals of Statistics, 17:31–57, 1989. N. Lawrence, M. Milo, M. Niranjan, P. Rashbass, and S. Soullier. Reducing the variability in microarray image processing by Bayesian inference. Technical report, Department of Computer Science, University of Sheffi ...

Project Report: Investigating topographic neural map development

... (mean luminance) and local contrasts. The visual system would not be able to encode this broad range of information using a single fixed scale resolution range. An element of adaptability to various contrasts and intensity levels present in the stimulus is hardcoded into the architecture of the visu ...

Theories of Language Processing

... programs have no built-in capacity to learn from their environment. Thus in most cases, these programs cannot ever know anything more about their world than what was built into them; as a result, they typically have neither the capacity to learn new information nor the capacity to learn through maki ...

Relational Networks

... distinguish this morpheme from others? Answer: Other morphemes necessarily have different connections ...

Abstract Neuron { y

... Conclusions: The logic underlying box-and-arrow models is perfectly compatible with connectionist models. Connectionist principles augment the boxes and arrows with (1) a mechanism for quantifying degree of damage and (2) mechanisms for error types, and hence an explanation of the error patterns. Implicati ...

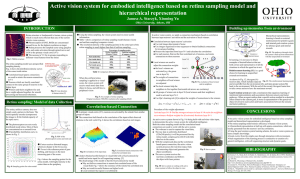

Active vision system for embodied intelligence based

... ● Local winners are used to adjust the connection weights ◘ The local winners are activated (e.g. the green one in layer N) ◘ The weights of connections to neighbors of the local winner are adjusted [Fig. 8: The excitation of local winner and its neighbors] ◘ The local winners help the neighbors to fire t ...

Integrating Scheduling and Control Functions in Computer

... The job database is formatted to be utilized by the Neural Network System. The output of the network ranks the different scheduling heuristics available. Several neural networks have been trained using the results of simulation runs, taking into consideration the input feature space and ranking of p ...

Neural Pascal

... driving such a network will take advantage of the structuring of the net, or of the properties of neurons when performing calculations or when updating states of neurons. It seems highly desirable to translate this view of a network into an executable program as directly as possible with the actual ...

artificial neural network circuit for spectral pattern recognition

... machine learning problems such as classification, image processing, etc. Unlike linear or logistic regression, ANNs can learn complex non-linear hypotheses for a large number of input features more efficiently [1]. The motivation behind neural networks was to have machines that could mimic the worki ...

Does computational neuroscience need new synaptic

... construction site, allows us to decide hours later which route to choose on the way back. In this example, the internal representation consists, first, of possible routes between home and work, second, the position and the cause of the traffic jam, and third, cause-dependent expectations about the d ...

View PDF - Advances in Cognitive Systems

... given the values of its children. For this purpose, each node will contain a classification learner. In principle, any supervised classification learner can be used within AN nodes. In this work, Artificial Neural Networks (ANNs; Rumelhart and McClelland, 1986) are used. At the leaf nodes, raw input ...

Learning Datalog Programs from Input and Output

... In the paradigm of learning from interpretation transition, an example is a pair ⟨I, J⟩ where I and J are interpretations (an interpretation is a set of propositional atoms). The learning task is, for a given set E of examples, to find a normal logic program P such that T_P(I) = J for every ⟨I, J⟩ ...

Document

... • Part I: Clean mathematical and algorithmic results: Galled-Trees, near-uniqueness, graph-theory lower bound, and the Decomposition theorem, Forest Theorem and the History Lower bound. • Part II: Practical computation of Lower and Upper bounds on the number of recombinations needed. Construction of ...

Asynchronous state

... These correlations could be related to information processing, or they could limit the efficiency for (e.g.) sensory discrimination … How are correlations generated in cortical circuits? ...

Financial time series forecasting with machine learning techniques

... used, the forecasting time-frame, the input variables used, and the evaluation techniques employed. In regards to the employed machine learning technique, there seems to be a trend to use existing artificial neural network models which are enhanced with new training algorithms or combined with emerg ...

Extended Liquid Computing in Networks of Spiking Neurons

... Many algorithms have been developed over the years, but we will not give more details about them (see [4]). Indeed, computations on attractor networks like RNNs show a number of limitations. First, a great number of attractors, i.e. a system of very high dimensionality, is needed to store inf ...

Rule Insertion and Rule Extraction from Evolving Fuzzy

... EFuNNs are FuNN structures that evolve according to the ECOS principles [8]. EFuNNs adopt some known techniques from [6, 15, 16] and from other known NN techniques, but here all nodes in an EFuNN are created during (possibly one-pass) learning. The nodes representing MF (fuzzy label neurons) can be ...

Masters Proposal Project

... of AHS in this province, in Stellenbosch in 1999, there have been further outbreaks, specifically in the Knysna/George area (Lord et al., 2005). This is cause for concern since such outbreaks could lead to legislation to restrict the movement of horses – especially race horses – countrywide, and cou ...

State-dependent computations - Frankfurt Institute for Advanced

... Traditional artificial neural networks, such as the perceptron [13] and multi-layer perceptrons [14], were designed to process static spatial patterns of inputs (for example, for the discrimination of handwritten characters), and the network structure therefore had no need to implicitly or explicitly inc ...

Catastrophic interference

Catastrophic interference, also known as catastrophic forgetting, is the tendency of an artificial neural network to completely and abruptly forget previously learned information upon learning new information. Neural networks are an important part of the network approach and connectionist approach to cognitive science. These networks use computer simulations to model human behaviours such as memory and learning. Catastrophic interference is therefore an important issue to consider when creating connectionist models of memory. It was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989) and Ratcliff (1990).

It is a radical manifestation of the ‘sensitivity-stability’ dilemma, also called the ‘stability-plasticity’ dilemma. Specifically, these dilemmas refer to the difficulty of building an artificial neural network that is sensitive to, but not disrupted by, new information. Lookup tables and connectionist networks lie at opposite ends of the stability-plasticity spectrum. The former remain completely stable in the presence of new information but lack the ability to generalize, i.e. to infer general principles, from new inputs. Connectionist networks such as the standard backpropagation network, on the other hand, are very sensitive to new information and can generalize to new inputs.

Backpropagation models can be considered good models of human memory insofar as they mirror the human ability to generalize, but these networks often exhibit less stability than human memory. Notably, they are susceptible to catastrophic interference. This is a problem when modelling human memory because, unlike these networks, humans typically do not show catastrophic forgetting. Thus, catastrophic interference must be eliminated from these backpropagation models in order to enhance their plausibility as models of human memory.
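The contrast between a lookup table and a gradient-trained network can be illustrated with a small sketch. It is a deliberate simplification, not the setup used by McCloskey and Cohen or Ratcliff: it uses a single linear unit trained with the delta rule rather than a multi-layer backpropagation network, and the two tasks (`TASK_A`, `TASK_B`), the learning rate, and the epoch count are all hypothetical values chosen for illustration. The unit is trained on task A, then on task B alone with no rehearsal of task A.

```python
# Hypothetical toy tasks: each is a list of (input vector, target) pairs.
TASK_A = [((1.0, 0.0, 1.0), 1.0), ((0.0, 1.0, 1.0), 0.0)]
TASK_B = [((1.0, 1.0, 0.0), 1.0), ((1.0, 0.0, 0.0), -1.0)]


def predict(weights, x):
    """Output of a single linear unit: dot product of weights and input."""
    return sum(w * xi for w, xi in zip(weights, x))


def mse(weights, task):
    """Mean squared error of the unit over every pattern in a task."""
    return sum((predict(weights, x) - t) ** 2 for x, t in task) / len(task)


def train(weights, task, lr=0.1, epochs=500):
    """Delta-rule (gradient descent) training on one task only."""
    weights = list(weights)
    for _ in range(epochs):
        for x, t in task:
            error = predict(weights, x) - t
            # Every weight is shared by both tasks, so this update is free
            # to overwrite whatever was learned earlier.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights


def run_demo():
    """Train on A, then on B alone; return (A error before B, B error, A error after B)."""
    w = train([0.0, 0.0, 0.0], TASK_A)
    error_a_before = mse(w, TASK_A)
    w = train(w, TASK_B)  # sequential learning, no rehearsal of task A
    return error_a_before, mse(w, TASK_B), mse(w, TASK_A)


def lookup_demo():
    """A lookup table keeps task A intact but cannot answer unseen inputs."""
    table = dict(TASK_A)
    table.update(TASK_B)  # learning B leaves the A entries untouched
    a_intact = all(table[x] == t for x, t in TASK_A)
    unseen = table.get((1.0, 1.0, 1.0))  # never stored, so no answer
    return a_intact, unseen


if __name__ == "__main__":
    a_before, b_after, a_after = run_demo()
    print(f"task A error after learning A: {a_before:.6f}")
    print(f"task B error after learning B: {b_after:.6f}")
    print(f"task A error after learning B: {a_after:.6f}")
    print("lookup table (A intact, answer for unseen input):", lookup_demo())
```

In this sketch the error on task A is essentially zero after the first phase and rises to about 4.1 once task B has been learned, because both tasks are stored in the same three weights. The lookup table shows the opposite profile: its task A entries survive, but it returns nothing for an input it has never stored.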